Results from Main web retrieved at 23:45 (GMT)

These are some useful packages for the laptops:

Development:

- subversion

- mercurial (hg)

- git

- texlive-full

- cmake

- build-essential

- opencv 2.0.0-4

- gdb

- gprofiler/etc

- ssh

Mobile Robots:

- Cyton (arm software) - http://robots.mobilerobots.com/wiki/Cyton_7DOF_Arm_Software

- Mobile Sim

- Aria

- ARNL

- GPS localization

- MobileEyes

- Joystick Driver, etc...

Player/Stage:

- this whole mess

ETC...

- Ogre ODE

- Blender

- RobotTestSuite

- boost libraries

My Links

- WelcomeGuest - starting points on TWiki

- TWikiUsersGuide - complete TWiki documentation, Quick Start to Reference

- Sandbox - try out TWiki on your own

- AlexCohenSandbox - just for me

My Personal Preferences

- Preference for the editor, default is the WYSIWYG editor. The options are raw, wysiwyg:

- Set EDITMETHOD = wysiwyg

- Fixed pulldown menu-bar, on or off. If off, the menu-bar hides automatically when scrolling.

- Set FIXEDTOPMENU = off

- Show tool-tip topic info on mouse-over of WikiWord links, on or off:

- Set LINKTOOLTIPINFO = off

- More preferences

TWiki has system-wide preference settings defined in TWikiPreferences. You can customize these settings to your needs. To overload a system setting: (1) do a "raw view" on TWikiPreferences, (2) copy a Set VARIABLE = value bullet, (3) do a "raw edit" of your user profile page, (4) add the bullet to the bullet list above, and (5) customize the value as needed. Make sure the settings render as real bullets (in "raw edit", a bullet requires 3 or 6 spaces before the asterisk).

Related Topics

- ChangePassword for changing your password

- ChangeEmailAddress for changing your email address

- UserList has a list of other TWiki users

- UserDocumentationCategory is a list of TWiki user documentation

- UserToolsCategory lists all TWiki user tools

All Authenticated Users Group

This is a special group to which all authenticated users belong. Its main use is to lift a web-level restriction at the topic level. It is close to AllUsersGroup; the difference is that unauthenticated users belong to AllUsersGroup but not to AllAuthUsersGroup. Suppose a web is viewable only by members of the DarkSideGroup because of the following line on WebPreferences:

* Set ALLOWWEBVIEW = Main.DarkSideGroup

Putting the following line on a topic makes that topic viewable by anybody who is authenticated:

* Set ALLOWTOPICVIEW = Main.AllAuthUsersGroup

This topic is not necessary for the group to work, because the group is implemented in the code rather than as a topic that lists members. Related topics: TWikiGroups, AllUsersGroup, TWikiAccessControl

All Users Group

This is a special group to which literally all users belong. Its main use is to lift a web-level restriction at the topic level. It is close to AllAuthUsersGroup; the difference is that unauthenticated users belong to AllUsersGroup but not to AllAuthUsersGroup. Suppose a web is viewable only by members of the DarkSideGroup because of the following line on WebPreferences:

* Set ALLOWWEBVIEW = Main.DarkSideGroup

Putting the following line on a topic makes that topic viewable by anybody:

* Set ALLOWTOPICVIEW = Main.AllUsersGroup

This topic is not necessary for the group to work, because the group is implemented in the code rather than as a topic that lists members. Related topics: TWikiGroups, AllAuthUsersGroup, TWikiAccessControl

BumbleBee2 ROS Driver

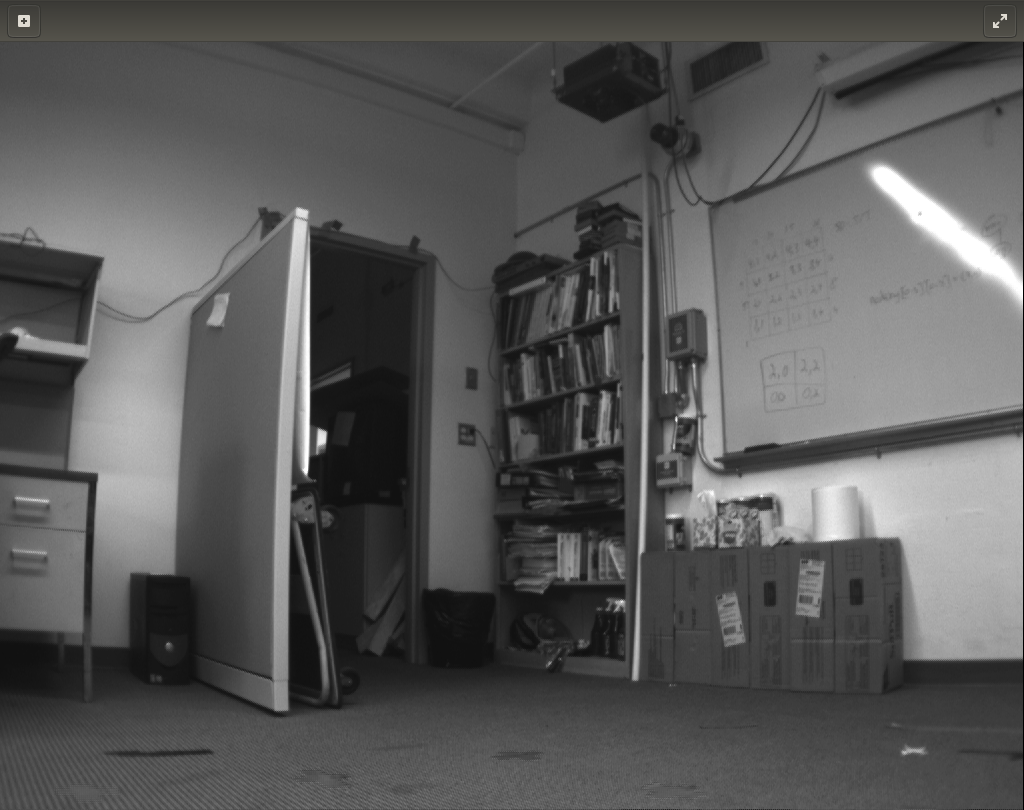

The BB2 driver uses the camera1394stereo package, provided by Systems, Robotics, & Vision (SRV) at the University of the Balearic Islands. We modified the source files within the driver, which previously had issues with queuing and dequeuing the buffer ring, probably because of the slow processor in the Pioneer 3AT robots we used. We changed it to single-shot mode rather than continuous transmission. Using ROS, the program can load image data from the right and left stereo cameras onto a server.

Systems, Robotics, & Vision's website: http://srv.uib.es/

SRV's GitHub: https://github.com/srv

How to Run BB2 ROS Driver

- Server

- open terminal, run roscore

- Robot

- cd catkin_ws

- . devel/setup.bash

- cd src/camera1394stereo/launch

- export ROS_MASTER_URI=http://<server/laptop_ip_address>:11311

- export ROS_IP=<robot_ip_address>

- roslaunch stereo_camera.launch

- N.B.: if there is an error with the guid, change both the <guid>.yaml file name and the parameter in the <guid>.yaml file, AND restart roscore

- After setup is complete, on the server (or laptop), run rosrun rviz rviz

- in RViz, click Add

- select Image

- within the Image parameter, change the Image Topic to either /stereo_camera/left/image_raw or /stereo_camera/right/image_raw

- a window should pop up with the received image being displayed from the stereo camera
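The two `export` lines in the robot steps tie the robot to the roscore running on the server. As a minimal sketch (the helper names and the sample IP addresses are hypothetical; only the variable names and port 11311 come from the steps above), the values can be generated like this:

```python
def ros_env(server_ip: str, robot_ip: str, port: int = 11311) -> dict:
    """Build the environment variables exported on the robot.

    server_ip is the machine running roscore; robot_ip is the robot itself.
    """
    return {
        "ROS_MASTER_URI": f"http://{server_ip}:{port}",
        "ROS_IP": robot_ip,
    }


def export_lines(env: dict) -> list:
    """Render the variables as shell export statements."""
    return [f"export {name}={value}" for name, value in env.items()]


if __name__ == "__main__":
    # Sample addresses are placeholders, not the lab's real addresses.
    for line in export_lines(ros_env("192.168.0.10", "192.168.0.20")):
        print(line)
```

Printing the lines makes it easy to paste them into the robot's terminal before running roslaunch.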

Permissions

- Persons/group who can view/change the page:

- Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

Switches have been stacked, but management interface still considers them as two separate switches with the same IP

NOTE: 9/5/2010 Turning on the 2nd Switch causes the blades not to be able to communicate any longer, and the NICs on the machines flash green very rapidly

Labelled the cables in the following way:

ControllerNode: Main server with fiber connection is node "00"

Remaining servers each have 2 ethernet data ports, denoted node/1 and node/2, where node is the server number, 01 to 10

Top Port (eth1) is hotpluggable to an internet connection (X.X.X.142 on our local lab network)

Bottom Port (eth0) is set up as IP 10.10.10.1NODE, where NODE is the two-digit node number, so 10.10.10.100 to 10.10.10.110 are reserved for the Nodes
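The eth0 addressing rule can be written down as a small helper. This is only a sketch of the numbering scheme described above; the function names are hypothetical and the range check assumes nodes 00 (controller) through 10.

```python
def node_label(node: int) -> str:
    """Two-digit cable label for a node, e.g. 4 -> '04'."""
    return f"{node:02d}"


def node_ip(node: int) -> str:
    """Private eth0 address for a node under the 10.10.10.1NODE scheme."""
    if not 0 <= node <= 10:
        raise ValueError("node must be between 0 and 10")
    # Node 00 maps to .100 and node 10 maps to .110.
    return f"10.10.10.{100 + node}"


if __name__ == "__main__":
    for n in range(11):
        print(node_label(n), node_ip(n))
```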

Both disks appear as one disk called: /dev/cciss/c0d0 to the operating system

SlaveNode Configuration:

Drives are configured as a logical volume via the HP Smart Array P400 RAID controller (Manual), RAID 0 ("fault tolerance")

Not sure what the other configuration settings are--ask Sirhan

Partition table of logical drive---must be connected using the RAID Controller (press F8 to get into it and set up the logical drives)

Settings of logical drives: Bays 1 and 2 or 3 and 4 (paired)

Clonezilla will refer to the drives

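| Partition | Start | End | Type |
|---|---|---|---|
| p1 | 1 | 2432 | primary, Linux |
| p2 | 2433 | 7295 | primary, Linux |
| p3 | 7296 | 10942 | primary, Linux |
| p4 | 10943 | end | extended |
| p5 | 10943 | 17021 | extended |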

63 sectors

121594 cylinders

16065 * 512 = 8225280 bytes
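The cylinder size above is just sectors per cylinder times the 512-byte sector size. A short sketch of that arithmetic follows; it assumes the start and end values in the partition listing are cylinder numbers (the notes do not say so explicitly), and the function names are hypothetical.

```python
SECTOR_BYTES = 512
SECTORS_PER_CYLINDER = 16065  # from the notes; 16065 = 255 * 63


def cylinder_bytes(sectors_per_cylinder: int = SECTORS_PER_CYLINDER,
                   sector_bytes: int = SECTOR_BYTES) -> int:
    """Size of one cylinder in bytes."""
    return sectors_per_cylinder * sector_bytes


def partition_bytes(start_cyl: int, end_cyl: int) -> int:
    """Size of a partition spanning start_cyl..end_cyl inclusive.

    Assumes the listing gives cylinder numbers.
    """
    return (end_cyl - start_cyl + 1) * cylinder_bytes()


if __name__ == "__main__":
    print(cylinder_bytes())            # bytes per cylinder
    print(partition_bytes(1, 2432))    # size of p1 under the assumption above
```

Under that assumption p1 comes out at roughly 20 GB, which is a plausible size for a root partition.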

-----

[original defaults read:

32 sectors

239389 cylinders, 8160 * 512 = 4177920 bytes]

ISSUES:

RAID controller doesn't like swapping of drives for Logical Volumes

NOTE ABOUT LOGICAL DRIVE MANAGEMENT:

If there are 2 logical volumes (4 drives) and the drives in bays 3 and 4 are removed and replaced, the HP Smart Array controller will not detect ANY logical volumes unless the old volume is properly deleted before the new disks are inserted.

FAILED ATTEMPT TO CLONE:

/opt/drbl/sbin/ocs-onthefly -g auto -e1 auto -e2 -j2 -v -f sda -t sdb

dd if=/dev/sda of=/tmp/ocx

FRESH INSTALLATION ON A NODE:

1. SET UP THE RAID DISK ARRAY

- At the HP Smart Array P400 Controller Initialization screen, press F8 (it passes quickly so be ready)

2. Install the TESTING snapshot of Debian. I used the business card CD, installed the base system, and selected only the System Utilities and SSH server

3. configure /etc/hosts /etc/hostname /etc/network/interfaces appropriately (fill this in)

4. install the following packages: openmpi-common openmpi-bin libopenmpi1.3 libopenmpi-dev emacs23-nox and openssh-server

5. I think something needs to be done with SWAP files

Currently Nodes 01-03 and 05-10 are set up with EXT4 + LVM + NFS, but this seems to fail with "scattertime" test (NFS hangs)

Node 04 is configured with ext3 + NFS and no LVM.

We noticed that ext4 + NFS + LVM was causing problems (the system was hanging), so we have gone back to the original partition table (above) with ext3 partitions.

9/7/2010 We also had an issue with the speed of the ethernet cards. Rather than running at gigabit speed (1000BaseT), they were running at 10BaseT half duplex. We determined this by examining the /var/log/messages file, or by typing "dmesg" immediately after unplugging and replugging the cable. A simple reboot solved the problem, but we are unsure what set the cards to that mode in the first place.

-- StephenFox - 2010-08-23

CISDeptGroup

Use this group for access control of webs and topics.

- Member list:

- Set GROUP = DamianLyons, RobertMoniot, ArtWerschulz, PhilipAmanqwaDanquah

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = CISDeptGroup

Introduction to the Computing Facilities

of the Department of Computer and Information Sciences

at Fordham University

- Overview

- Computing facilities

- Logging in on Linux

- Logging in on Windows

- Backups: recovering lost files

- How to submit programming assignments

- Disk quotas

- File transfer

- Sending and reading email in Linux

- Using a CTMC to describe the time steps of robot moves

- Pick an expected value for the initial time frame

- Reduce the time for each step as the distance from the Goal decreases

-- (c) Fordham University Robotics and Computer Vision

Notes on filter_threshold

- Must have OpenCV 2.0 or a newer version installed.

- The result image is stored in a folder called test, located in the same folder as the source. Please create this folder before running the program.

- Adjust threshold values accordingly to filter the correct amount

- Also, adjust the erode parameters accordingly.

- Change the threshold value as needed: the program removes everything in the image received from the webcam except areas whose values lie between threshvalue and maxthresh.

- Source: filter_threshold.cpp

- Makefile: Makefile (works on Ubuntu 11.10 and later; for earlier versions, replace LDLIBS with LDFLAGS).
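The band filtering described in these notes can be illustrated without OpenCV. The sketch below is not the code in filter_threshold.cpp: it works on a grayscale image stored as nested lists, and it assumes the bounds are inclusive and that rejected pixels are set to 0. Only the names threshvalue and maxthresh come from the notes.

```python
def band_threshold(image, threshvalue, maxthresh):
    """Keep pixels with threshvalue <= value <= maxthresh; zero the rest.

    image is a list of rows, each a list of integer gray levels.
    """
    return [
        [pixel if threshvalue <= pixel <= maxthresh else 0 for pixel in row]
        for row in image
    ]


if __name__ == "__main__":
    frame = [
        [10, 120, 250],
        [90, 200, 30],
    ]
    print(band_threshold(frame, 100, 220))
```

Raising threshvalue or lowering maxthresh narrows the band and filters out more of the image.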

-- PremNirmal - 2011-08-23

If we relax the assumption that cmap is bijective, then an input operation may have its port connected to several output ports. Dynamic fan-in and fan-out on ports are restricted to degree 1: if an input port is connected to q output ports, this is the same as q separate communication operations in sequence, and likewise if an output port is connected to q input ports.

Let (fan-out) FO(j,k) = { (i,a) : cmap(i,a) = (j, port(j,k)) } and let (fan-in) FI(i,k) = { (j,b) : cmap(i, port(i,k)) = (j,b) }, where port(i,k) is the port used in operation IO_{i,k}.

Case 2: cmap is irreflexive, respects processes, connections PS and is invertible with dynamic fan-in/out restricted to 1. For all processes i and IO_{i,top(i)}, there is at most one other process j and IO_{j,top(j)} such that cmap(i, port(i,top(i))) = (j, port(j,top(j))). Let fi(IO_{i,k}) be the degree, that is, the number of different (j,b) for which cmap(i, port(i,k)) = (j,b), where port(i,k) is the port used in operation IO_{i,k}.

Step 4 is modified since more than one (j,b) could exist; however, with the assumption of dynamic fan-in/out restricted to degree one, there is at most one that is top(j) for some process j. The total number of IO operations is still n*m; however, on each operation we may need to search a fan-out FO(j,k) or fan-in FI(i,k) set. Since the largest that each such set could be is the maximum number of input or output ports, P = max(|IN|,|OU|), the worst case complexity would be P*(n*m/2).
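To make the FI and FO definitions concrete, here is a minimal sketch that builds both sets from a non-bijective cmap. It simplifies the notation: cmap is given as a list of ((i, a), (j, b)) pairs, and the sets are keyed by (process, port) rather than by operation index k. The function names and the sample connections are hypothetical.

```python
from collections import defaultdict


def fan_sets(connections):
    """Return (fan_in, fan_out) dictionaries for a cmap relation.

    fan_in[(i, a)]  is the set of (j, b) with cmap(i, a) = (j, b)   (FI).
    fan_out[(j, b)] is the set of (i, a) with cmap(i, a) = (j, b)   (FO).
    """
    fan_in = defaultdict(set)
    fan_out = defaultdict(set)
    for source, target in connections:
        fan_in[source].add(target)
        fan_out[target].add(source)
    return dict(fan_in), dict(fan_out)


def max_degree(fan_in, fan_out):
    """Largest FI or FO set actually present (bounded above by P)."""
    sizes = [len(s) for s in fan_in.values()] + [len(s) for s in fan_out.values()]
    return max(sizes, default=0)


if __name__ == "__main__":
    cmap = [((1, "in"), (2, "out")), ((1, "in"), (3, "out")), ((4, "in"), (2, "out"))]
    fi, fo = fan_sets(cmap)
    print(fi[(1, "in")], fo[(2, "out")], max_degree(fi, fo))
```

Each lookup during matching then searches a set of at most P entries, which is where the factor P in the Case 2 bound comes from.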

If we relax the restriction on dynamic fan-in/out, then each time we encounter an operation in process i, IO_{i,top(i)}, there will in the worst case be |FI(i,top(i))| cases to consider (and equivalently for fan-out). The problem is that selecting one of these cases may later make it impossible to match step 5 in the algorithm; therefore, all the different choices need to be explored to see if at least one allows all IO operations to be matched.

Case 3: cmap is irreflexive, respects processes, connections PS and is invertible with no dynamic fan-in/out restriction, and all orderings of communications must be examined to determine if a) any order exists in which all IO operations are matched or b) any order exists in which all IO operations are not matched. The maximum size of an FI or FO set is P, and in the worst case all m IO operations will have this number of choices, resulting in an exponential complexity P^((n*m)/2).

IO operations composed with Parallel-min and Parallel-max compositions impose additional constraints on the IO matching process:

Parallel-max: all the operations in the composition must be matched, but they can be matched in any order.

Parallel-min: any one operation in the composition can be matched, but not more than one.

Case 4: cmap is irreflexive, respects processes, connections PS and is invertible with dynamic fan-in/out restriction of 1, and including parallel-min compositions. Parallel-min presents a choice at step 4 in the same manner that FI or FO would. However, in this case, as long as there is any match for step 5, it is sufficient. Since the number of choices available in the Parallel-min composition is still at most P, the complexity does not grow beyond P*(n*m/2). In fact, because the parallel-min includes P of the m IO operations, and because only one of these needs to be considered, it reduces the complexity to P*(n*(m-P))/2 = P*(n*m/2) - P^2*n/2.
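The worst-case counts quoted for Cases 2 to 4 can be compared numerically. The sketch below reads the Case 3 bound as P raised to the power n*m/2, which is an assumption about the intended notation; the function names and the sample values of n, m and P are arbitrary.

```python
def restricted_cost(n, m, P):
    """Case 2 bound: P * (n*m/2), fan-in/out restricted to degree 1."""
    return P * (n * m / 2)


def unrestricted_cost(n, m, P):
    """Case 3 bound, read as P ** (n*m/2): every operation has up to P choices."""
    return P ** (n * m / 2)


def parallel_min_cost(n, m, P):
    """Case 4 bound: P of the m operations collapse to a single choice."""
    return P * (n * (m - P)) / 2


if __name__ == "__main__":
    n, m, P = 4, 6, 3
    print(restricted_cost(n, m, P))
    print(unrestricted_cost(n, m, P))
    print(parallel_min_cost(n, m, P))
    # The Case 4 identity: P*(n*(m-P))/2 equals P*(n*m/2) - P^2*n/2.
    print(parallel_min_cost(n, m, P) == restricted_cost(n, m, P) - P ** 2 * n / 2)
```

Even for these small values the unrestricted bound is several orders of magnitude larger than the restricted one.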

Case 5: cmap is irreflexive, respects processes, connections PS and is invertible with dynamic fan-in/out restriction of 1, and including parallel-max compositions. Parallel-max also presents a choice at step 4 in the same manner that FI or FO would. However, all the m operations still need to be carried out, so there is no reduction in complexity as seen above.

By 7th of June: Complete a working sample of sparse array code based on the proposal from last week. It will not implement all the features, but will store sparse data using the proposed method.

By 14th of June: Complete a working version of the code that implements the full requirements specified in week 1, including the ability to report and log the data structure size as sparse data is added.

By 21st of June: Test the code and fix errors. Integrate the code with the TimeDemo code for the information fusion display, and run the existing TimeDemo fusion algorithms showing data usage.

By 28th of June: Continue to integrate the code with the TimeDemo code for the information fusion display, and run the existing TimeDemo fusion algorithms showing data usage and collecting additional, much larger data sets.

By 5th of July: Fix all that needs to be fixed again.

- Requirements for a 3D sparse array data type: bullet list.

- Whether each of the STL container types would be useful for representing this (pros and cons maybe).

- Anything else you have found on sparse array representation that you want to share.

- Finishes with a proposal for how to proceed next week (i.e. base it on an STL container class, build from scratch, use an existing package from the web, etc.).

The program will be used to store the data the robots gather as coordinates. The robot moves through space and maps its surroundings, but most of those surroundings are empty space. A room, for example, is composed of 4 walls and some items, but the rest is empty space. Storing the data for the empty space takes a lot of unnecessary memory. That's why we're using a 3-dimensional sparse array to store the data, so that all the unregistered space doesn't take up memory.

Which STL container types would be useful for representing this? Many container types are not suited for the job because they focus more on insertion and extraction of data or have limited inserting options: Bitset, deque, list, queue, set, stack. The containers that would be suited are Array, Vector and Map. Since an Array has a fixed size and we don't know how much memory we will need, Vector is the better of those two. Map is an even better option, and we're considering using it as the container type for this program.
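The map-based idea can be sketched in a few lines. This is an illustration only, written in Python with a dict standing in for the STL map; the class name, method names and the choice of 0 as the empty value are all hypothetical. Only cells that hold data are stored, and the number of stored cells can be reported as data is added.

```python
class SparseGrid3D:
    """3D sparse array: only non-empty cells occupy memory."""

    def __init__(self, empty=0):
        self._cells = {}      # maps (x, y, z) -> value
        self._empty = empty   # value returned for unregistered space

    def set(self, x, y, z, value):
        key = (x, y, z)
        if value == self._empty:
            # Writing the empty value frees the cell instead of storing it.
            self._cells.pop(key, None)
        else:
            self._cells[key] = value

    def get(self, x, y, z):
        return self._cells.get((x, y, z), self._empty)

    def __len__(self):
        """Number of stored cells, for logging the structure size."""
        return len(self._cells)


if __name__ == "__main__":
    grid = SparseGrid3D()
    grid.set(10, 20, 3, 1)       # a wall point
    grid.set(500, 7, 42, 1)      # another occupied point far away
    print(len(grid), grid.get(10, 20, 3), grid.get(0, 0, 0))
```

The two occupied cells cost two entries regardless of how far apart their coordinates are, which is the property the notes are after.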

COMPLETED

1. SPIE Defense and Security Symposium 2012, April 23-27, Baltimore MD; abstract due Oct 10th 2011. Abstract submitted. Accepted. Paper submitted. Minor formatting request and resubmit. Presentation made.

2. PerMIS'12 Performance Metrics for Intelligent Systems, March 20-22, 2012, College Park, MD; paper due date Nov 14th 2011 (http://www.nist.gov/el/isd/permis2012.cfm)

- Table of Contents of 2008 PerMIS Workshop. Includes all proceedings as pdf files.

- Table of Contents of 2009 PerMIS Workshop. Includes all proceedings as pdf files.

- 2010 PerMIS Workshop Schedule and proceedings. Does not include full proceedings.

IN PROGRESS

* IROS 2016, Daejeon, Korea. Oct 9-14 2016. http://www.iros2016.org/

FUTURE VENUES

* The 10th International Conference on Intelligent Unmanned Systems (ICIUS 2014), September 29 - October 1, 2014, Montreal, Quebec, Canada. Deadline 5/1

* 23rd International Conference on Systems Engineering (ICSEng 2014), Las Vegas, USA, August 19-21, 2014 (http://www.icseng.com)

* 2011 -- (http://www.cs.utah.edu/events/conferences/cav2011)

Page Permissions:

- Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

12/19 10:00a, PI teleconf

JANUARY 2013

1/3 10:00am Group Teleconf

1/18 3pm DTRA PI Teleconf

1/31 1pm DTRA Group Teleconf *** RESCHEDULED

FEBRUARY

2/7 1pm EST DTRA Group Teleconf (Prem to join from CA, 10am PST)

2/21 2pm DTRA PI

MARCH

3/7 2pm DTRA Group

Fordham visit to GATech, @CSER

Our presentation, evening 3/20;

Joint Project meeting 3/21

APRIL

4/3 11am DTRA PI

4/17 11am DTRA Group

MAY

5/1 DTRA PI

5/14 11am DTRA Group

5/28 4pm DTRA PI

JUNE

6/12 10am DTRA Group

6/25 1-3pm DTRA Webinar

JULY

7/8 1pm DTRA PI (Moved from 7/2 10am)

7/16 10am DTRA Group

Annual DTRA review, Arlington VA, 7/22-26

Congrats to James and Dagan for winning best Poster Award!!!

AUGUST

8/13,14: Presentation to OSD PSC standing subcommittee on T&E, V&V

8/22,23: Presentations to United Technology Research Center

8/29 2pm DTRA Group

SEPTEMBER

9/11 DTRA PI 10am.

9/24 DTRA Group 1pm

OCTOBER

10/3 GT team arrives in evening, dinner in NYC.

10/4 Joint meeting in LC campus, finish mid afternoon.

10/14 DTRA PI 4pm.

SSRR 10/22-28

10/30 DTRA Group 11am.

NOVEMBER

IROS 11/2-8

DECEMBER

Watchlist

Recent Changes

| Topic | Last Update |

|---|---|

| FRCVDataRepositoryHSVD2 in Main web | 2018-11-15 - 22:54 - r5 - DamianLyons |

Watched Topics

- To unwatch multiple topics, uncheck the topics, then click Update Watchlist.

- To watch all topics in a web, check the All checkbox, then click Update Watchlist.

- To have new topics added to your watchlist automatically, check the New checkbox, then click Update Watchlist.

- To add a topic to the list, visit it and click Watch on the menu bar.

Preferences

- Began software update for the robot, desktop and laptop

- Issued laptop 10 for testing, however, not all of the executables are currently working

- Bought hardware for the kinect

Adding a new n-ary operator, ie a function, to matheval

Example: let's add the 2-ary function tss_blah(a,b).

Step 1: Edit scanner.l and add |tss_blah to the end of the constants list. The '|' is the flex operator for OR; constants is a list of possible names, and '|' must separate the names.

Step 2: Edit symbol_tableV.c and add "tss_blah" to the initialized list of names functions_2V_names, and tss_blah to the initialized list of function pointers functions_2V. (If you wanted new 3-ary functions, add to functions_3V_names etc.)

Step 3: Put an external declaration for the function in dlibinterface.h: extern void *tss_blah(void *arg1, void *arg2). Functions take and return data as void *, because there are many different types. They need to be cast to the right type at some point. You can directly add a C function to xmathV.c and xmathV.h if you want a local function. This is good for functions that calculate simple stuff. However, if you need MOGS, C++ etc., then go to the next step.

Step 4: Only do this if you did not do a local function in xmathV.c (add to xmathV.h as well). Make a function in dlibinterface.cpp that does the work you need done: extern "C" void *tss_blah(void *a1, void *a2) { stuff in here }

Step 5: Probably don't need to do this. The assumption is that functions return reals. If you don't return a real, then the type of the return value may need to be figured out. This is done in evaluate node in nodeV.c, under the 'f' switch case. For 1-ary and 2-ary functions, real is the only currently implemented case. For 3-ary functions, the types of the arguments are used to decide on the type of the result. tss_condD takes a real, a mog and a mog and returns a mog. tss_pdiff takes a mog, a mog and a real and returns a real. For 3-ary functions the variable argtypes_flag is used as a binary flag to remember the types of the arguments.
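Step 2 relies on two parallel lists: a name at some index in functions_2V_names is served by the function at the same index in functions_2V. The sketch below imitates that lookup pattern in Python; it is not matheval's C code. The body of tss_blah and the "atan2" entry are placeholders chosen for illustration, and lookup_2ary and call_2ary are hypothetical helper names.

```python
import math


def tss_blah(a, b):
    # Placeholder body: the notes leave the real computation unspecified.
    return math.hypot(a, b)


# Parallel lists, mirroring the name table and the function-pointer table.
functions_2V_names = ["atan2", "tss_blah"]
functions_2V = [math.atan2, tss_blah]


def lookup_2ary(name):
    """Find the function registered under name, by matching list index."""
    try:
        index = functions_2V_names.index(name)
    except ValueError:
        raise KeyError(f"unknown 2-ary function: {name}")
    return functions_2V[index]


def call_2ary(name, a, b):
    """Call a registered 2-ary function; results are treated as reals."""
    return float(lookup_2ary(name)(a, b))


if __name__ == "__main__":
    print(call_2ary("tss_blah", 3, 4))
```

Forgetting to add the entry to one of the two lists shifts every later index, which is why both lists must be edited together.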
Files change checklist: scanner.l, symbol_tableV.c, symbol_tableV.h, dlibinterface.h, dlibinterface.cpp, xmathV.c, xmathV.h

Background

We consider a scenario where an autonomous platform that is searching an area for a target may observe unstable masonry or may need to travel over, by or through unstable rubble. One approach to allow the robot to safely navigate this challenge is to provide a general set of reactive behaviors that produce reasonable behavior under these uncertain and dynamic conditions. However, this approach may also produce behavior that works against the robot's long-term goals, e.g., taking the quickest or safest route to a disaster victim or out of the building. In our work we investigate combining a behaviour-based approach with the Cognitive Robotics paradigm of rehearsal to produce a hybrid reactive-deliberative approach for this kind of scenario.

Test World

A 15-room building was designed to give the robot space to be confronted with 'challenges' and to respond to them by either continuing a traverse through the building or selecting an alternate path. Figure 7(a) shows the simulation model of the building from above. The entrance and exit doorways are on the bottom right and left. There are several large rooms with multiple doors, which are the areas in which the robot can respond to challenges. There are also a number of smaller rooms which offer alternate routes through the building. Figure 7(b) shows the main waypoints (solid arrows) and alternate routes (dashed arrows). This information is stored in the waypoint schema.

Videos!

- Small World Example: You will see the 'imagination' simulation screen on the upper left and the 'real' world on the bottom. There is a text screen on the right - you don't need to read that, it's all diagnostics etc. In this example, you will see the robot navigate through the real world, its imagination following along, until it sees something unexpected - a block about to fall to block a door. You will see it being created in imagination, its effect simulated (and the 'real' world is stopped while this is shown to you -- otherwise it would be too fast to see), and a detour taken because of the effect. The red sphere that appears in the 'imagination' marks where the robot is intending to move. This red marker makes it possible to do image processing to determine if a location is blocked or not (rather than 3D or symbolic reasoning; faster!). cognitiveonly_smallbldg_1challenge.wmv.

- Reactive Only Example: You will see the real world on the top and the imagination on the bottom (no diagnostic screen for these longer runs). The aspect ratio is distorted, sorry. You will see the robot dash through the building and as it notices each falling block it tries to dodge it (using the Arkin (1998) potential field approach). Mostly it succeeds, but it depends on the size, shape and speed of the blocks of course - all randomly generated in these tests. In this run it makes it all the way to the end, and then gets clobbered! reactiveonly_4c_fail4.wmv.

- Cognitive Only Example: Different display again! The real world is bottom left; imagination bottom right and the diagnostic display is on the top. The comparison between real and expected scenes happens at every point, and when the falling block challenges are detected, you will see them recreated in simulation and their effect predicted. Of course it makes it to the end every time. cognitiveonly_text_4cs.wmv.

- Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

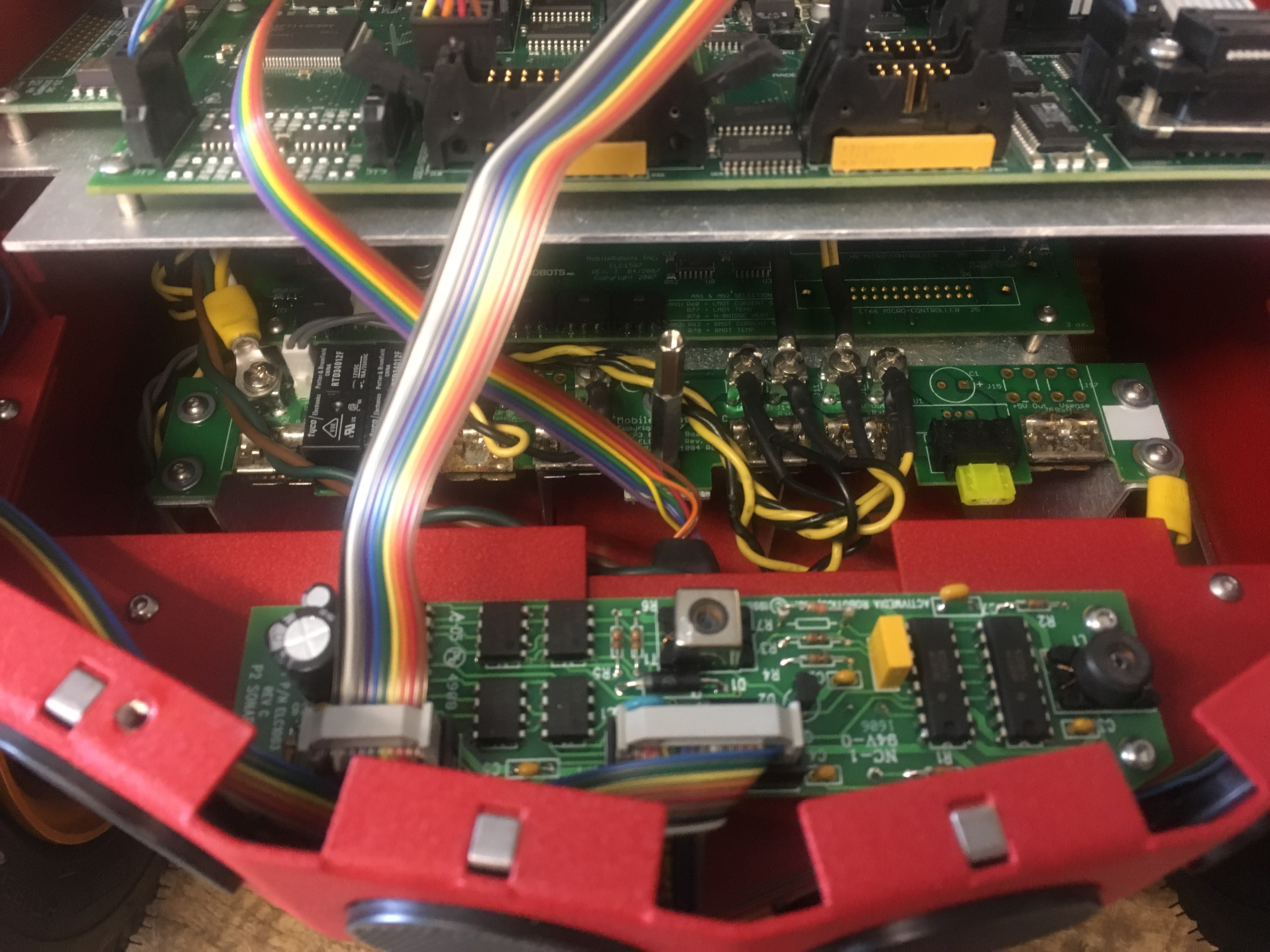

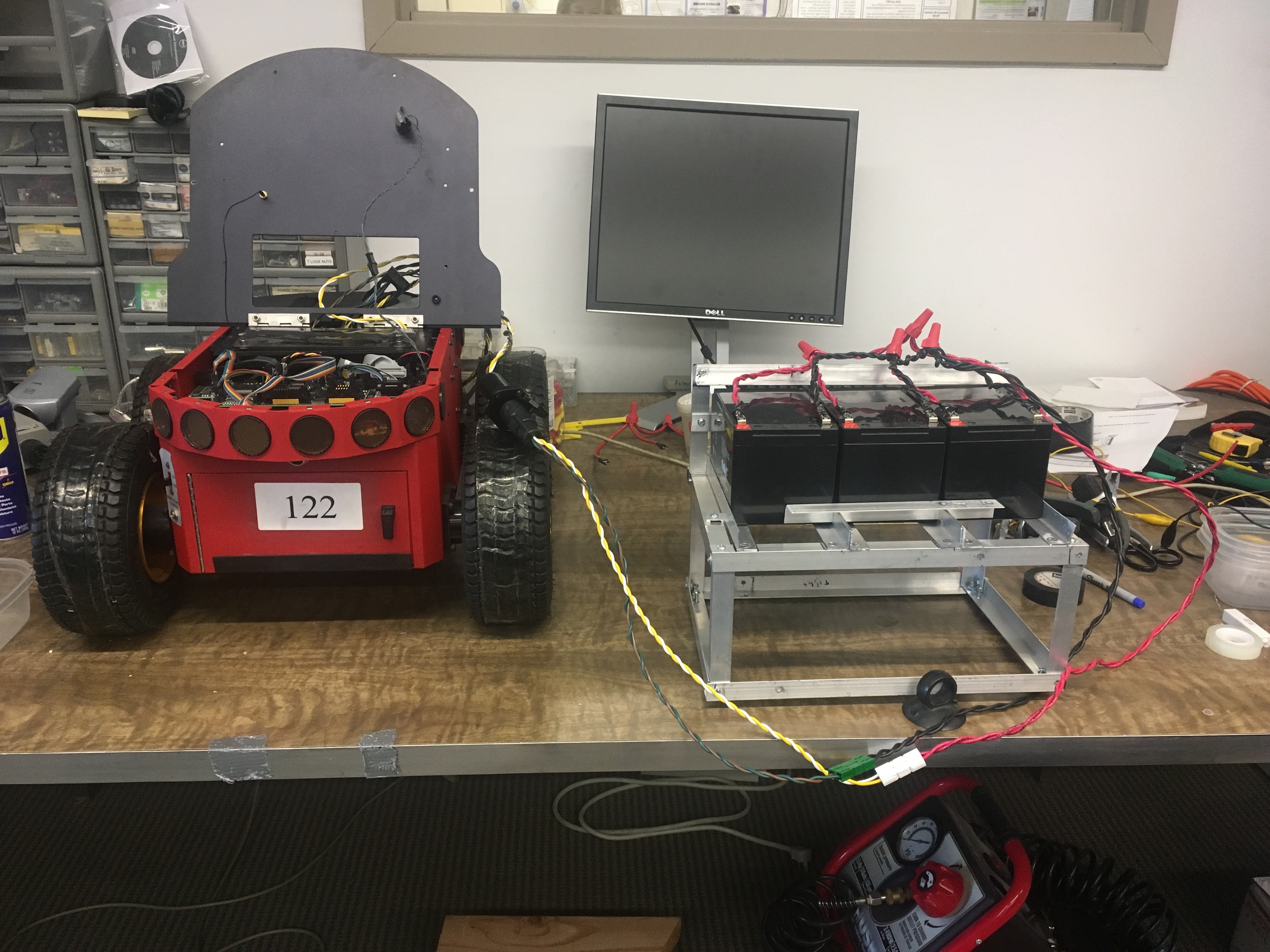

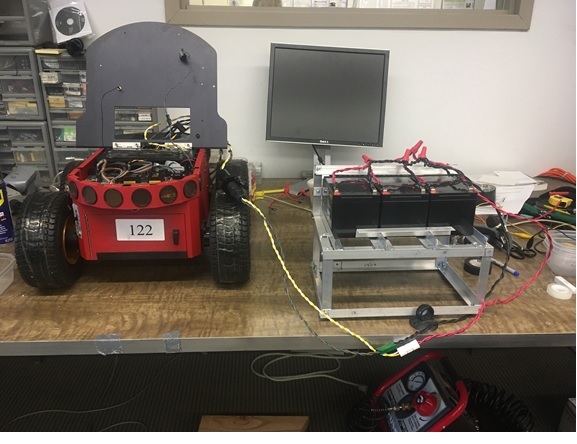

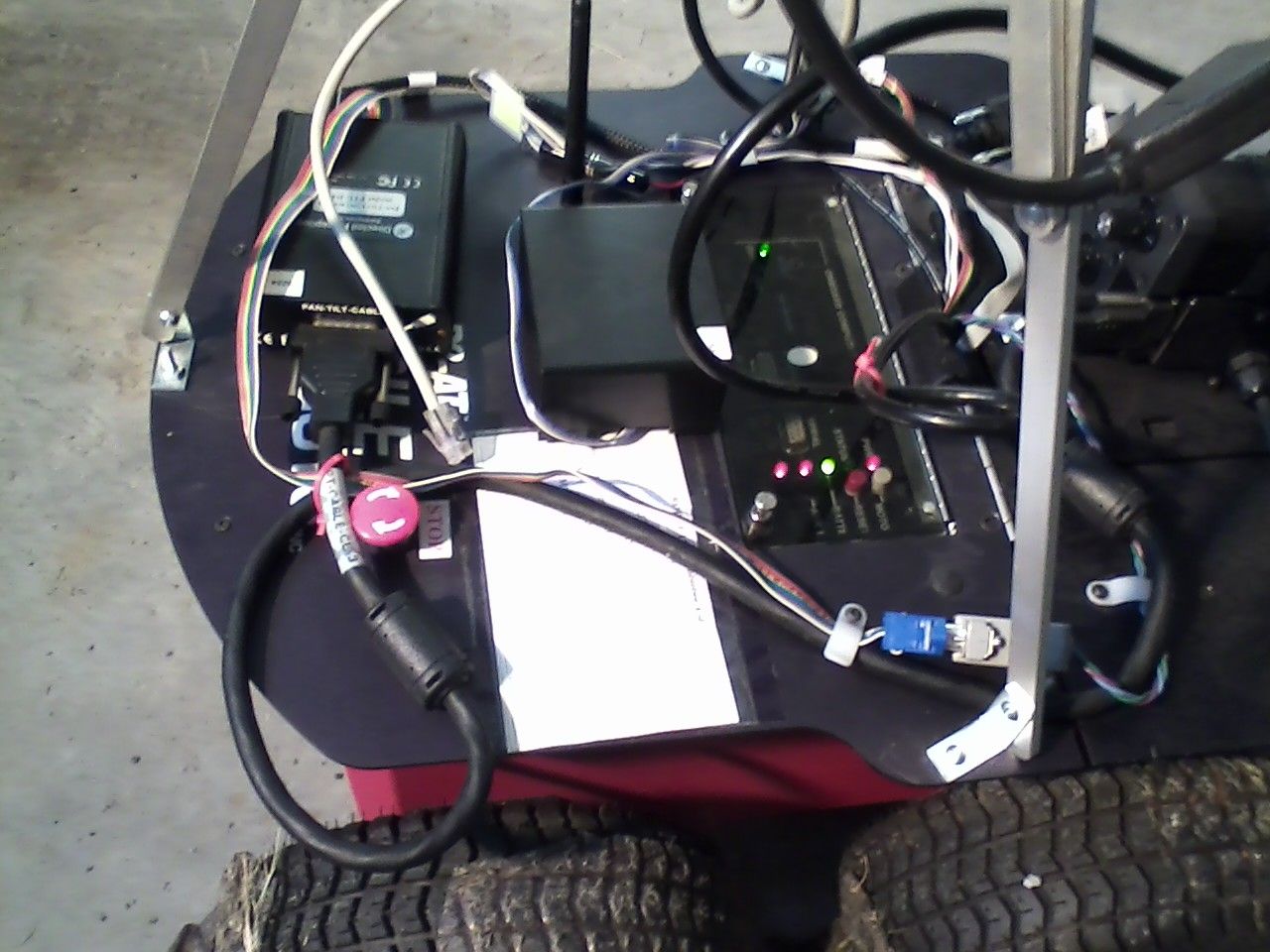

Battery Booster Pack for Mobile Robots P3-AT

Intro

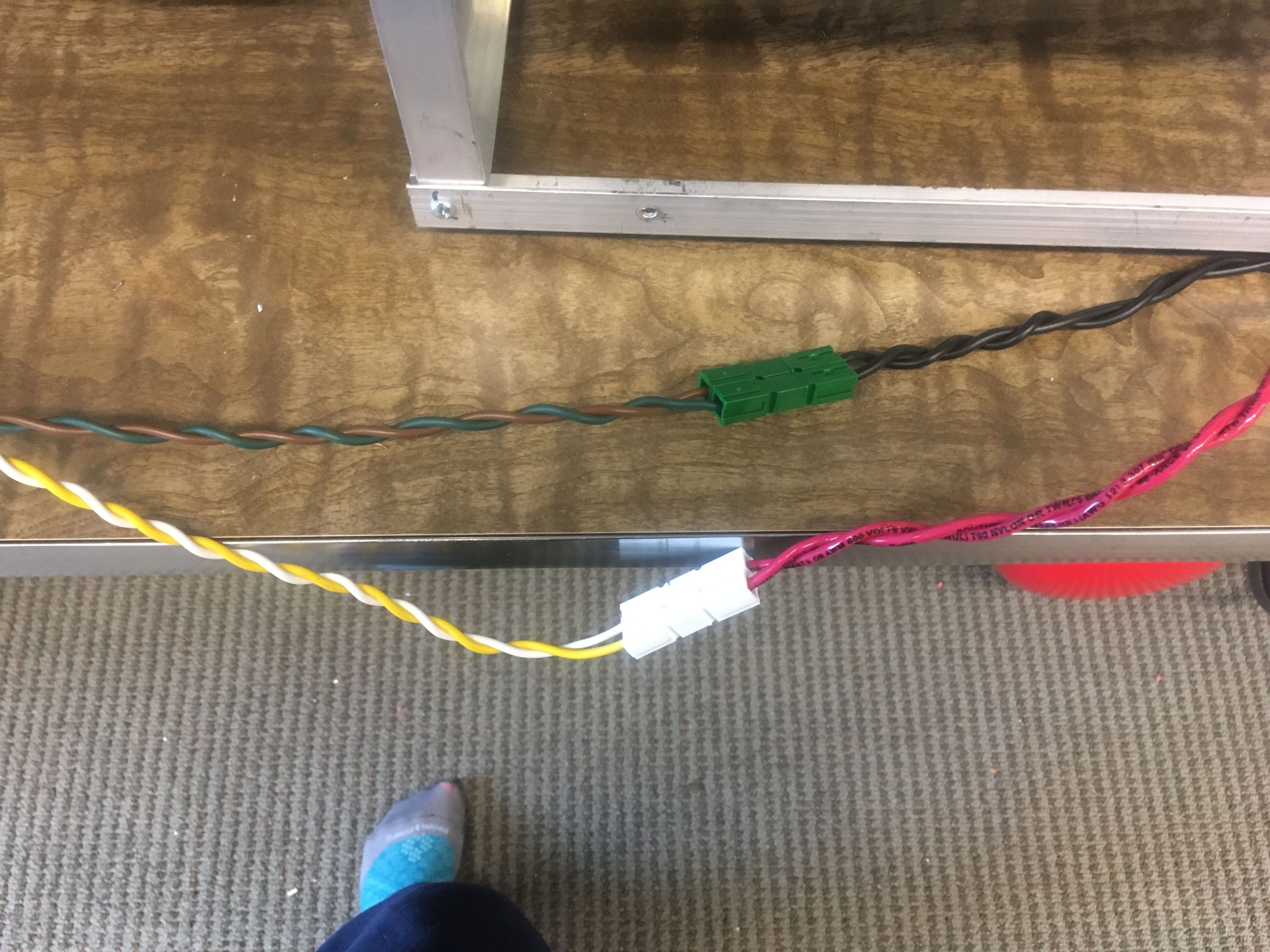

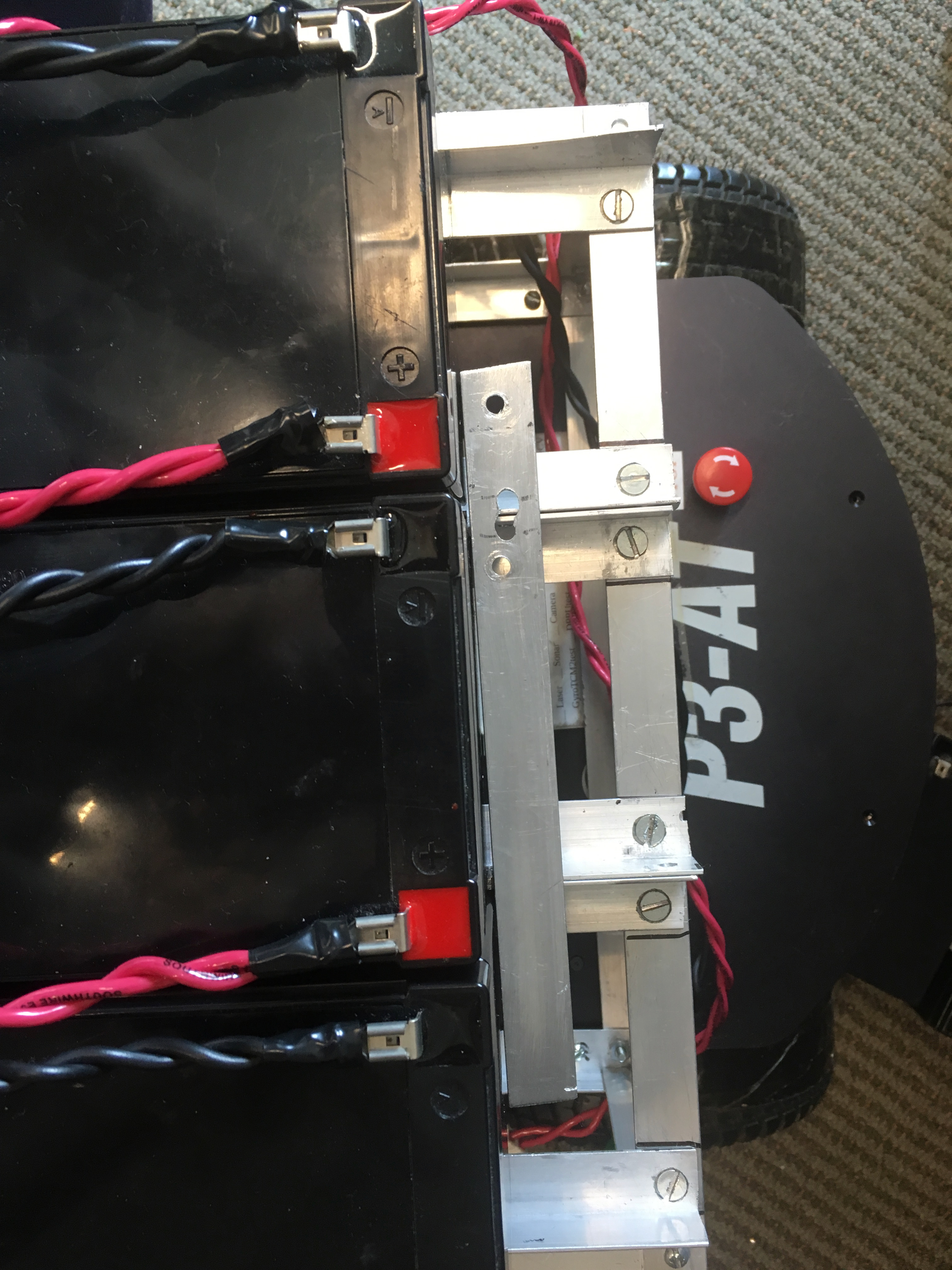

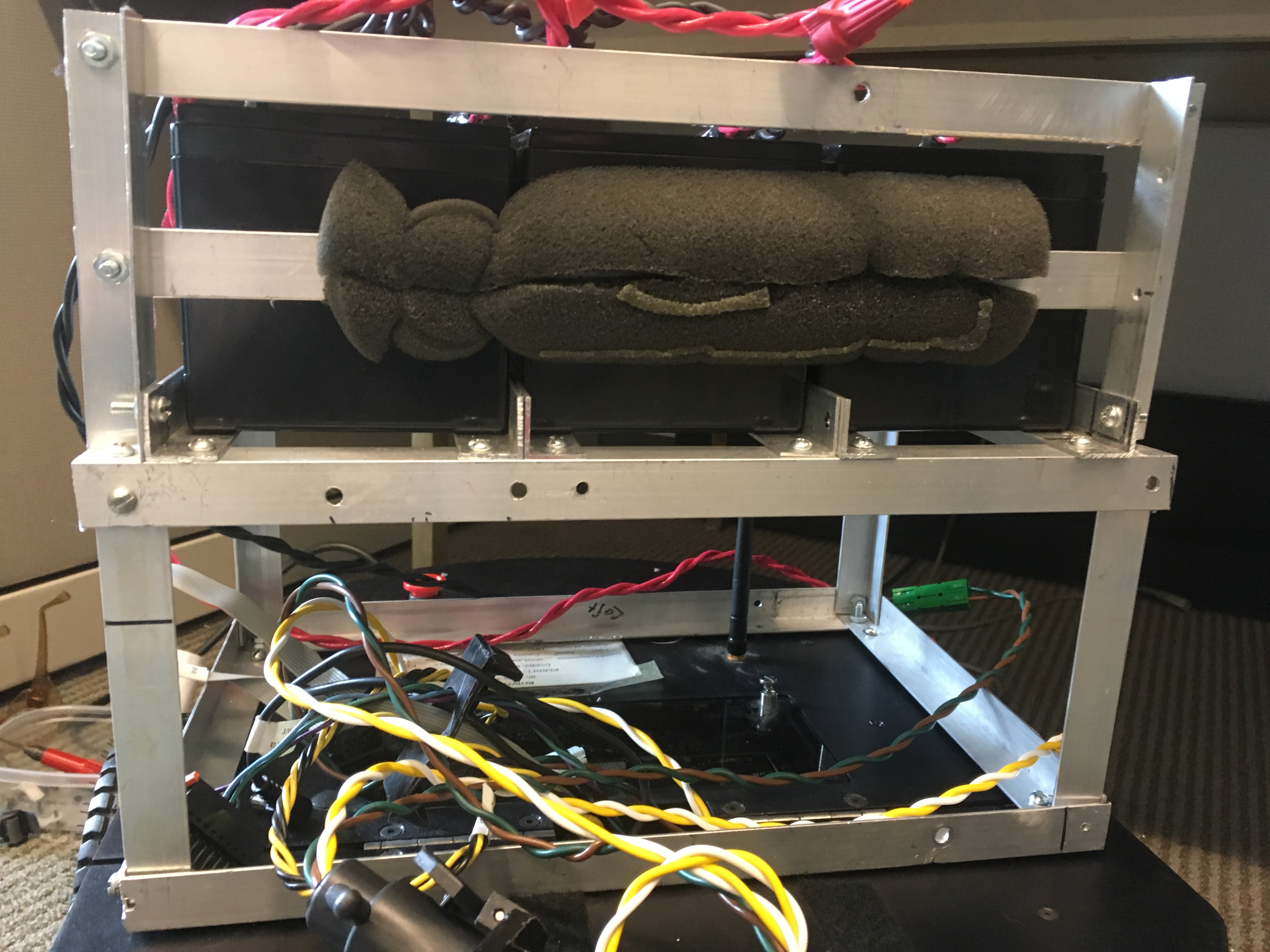

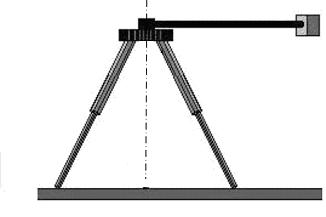

This project started as a way to support the need for more power longevity during testing and as a way to utilize batteries several sizes too large that were accidentally ordered. The batteries used for this pack were three 12V 12AH Lead Acid Casil Batteries about 4” by 6”. While these batteries were several inches too large to fit in to the robot itself, the voltage and current were compatible enough that they could easily be added in parallel to the standard batteries used.

The Mount

In order for the batteries to be easily added to the bot without interfering with functionality or taking up the valuable real estate the top of the bot offers, a mount had to be constructed. For this mount we decided to use angle iron as the main material and nuts and bolts as the fasteners. These materials were chosen because of the ease with which they can be used for construction and the strength they'll provide in the face of the somewhat weighty batteries. The dimensions of the base are: 13.5" wide x 9.25" long x 5.5" tall. The battery rails are 7.25” long and 4” apart with a flat bar attached underneath the rails for extra support.

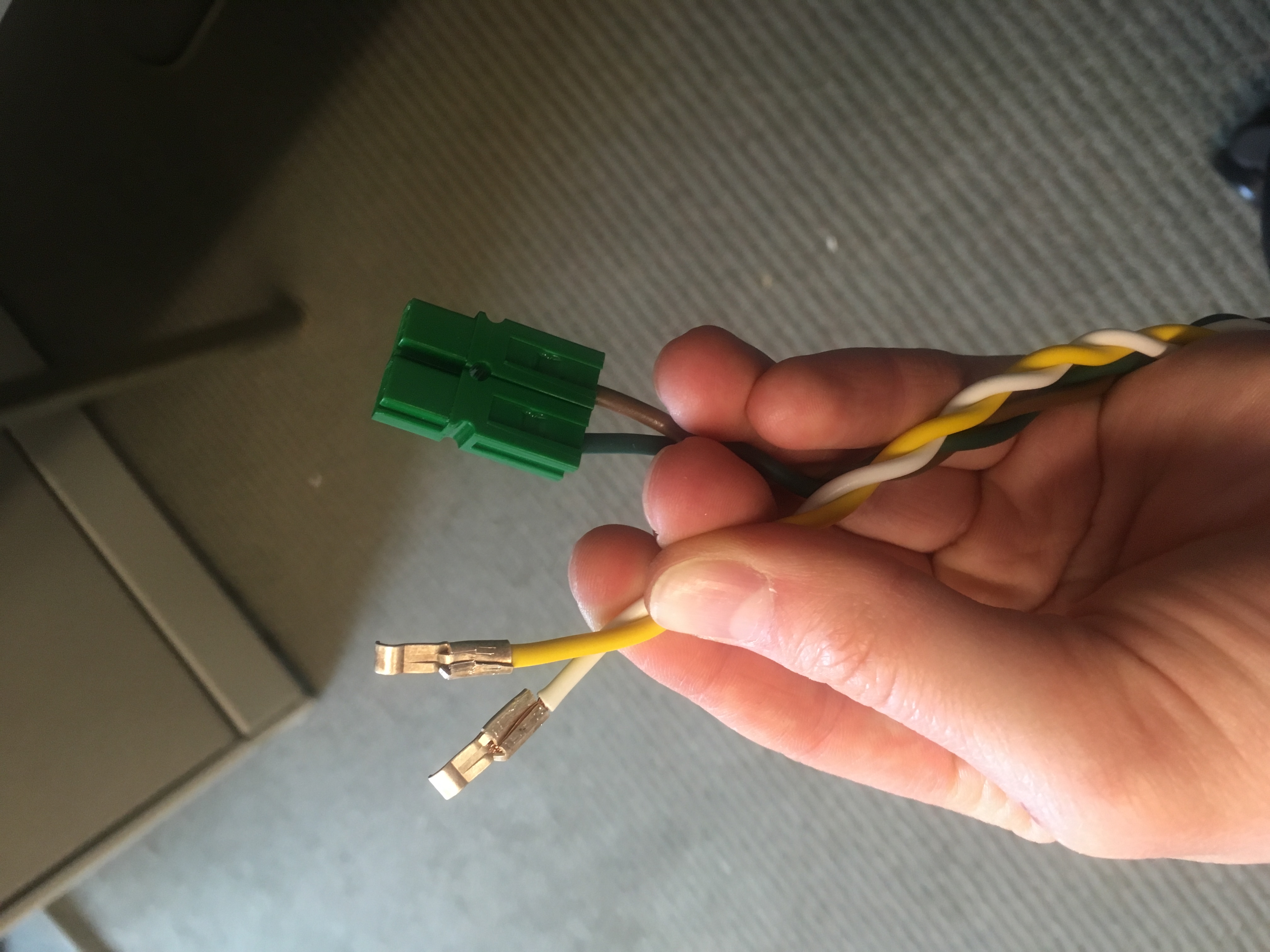

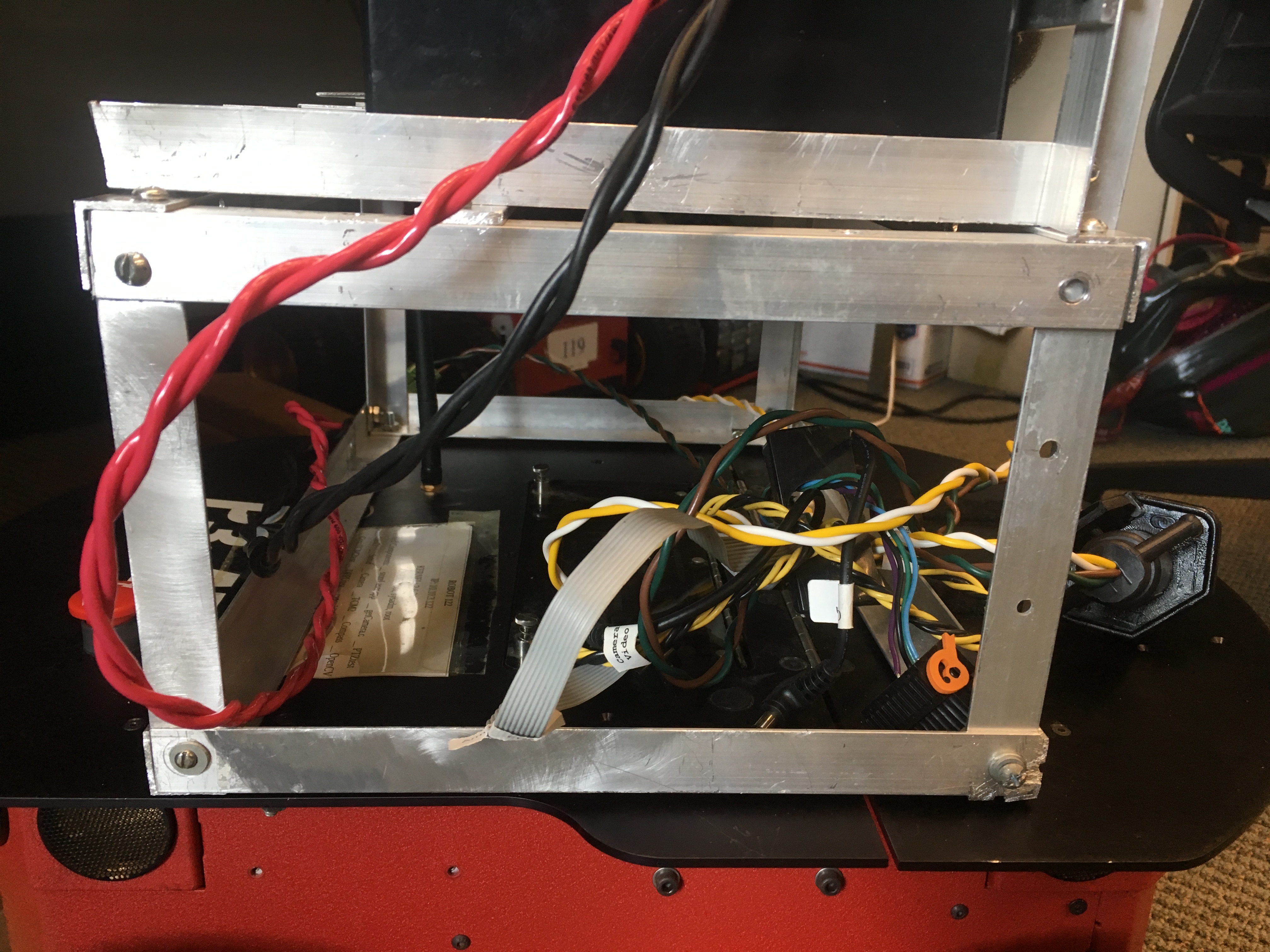

The Wiring

We cut the wires of the RV connector in the middle and connected the wires on the male end to the loose ends of the batteries’ wires with powerpole stackable connectors.

In order to connect the wires stemming from the female end to the bot itself we bared the wires and crimped those on to ring tongue connectors which we then screwed in to a positive (pictured left) and negative (pictured right) connection on the robot’s battery strip.

The Entire Setup

Testing

This booster pack more than doubled the life span of the robot during two phases of testing. The first phase of testing consisted of infinitely looping through turns and direction changes. The robot was raised on a box so that the wheels were off the ground. Its left and right wheels moved in opposing directions for a set period of time and then changed directions until the battery was drained. Un-boosted, the battery lasted about 4 hours; with the booster pack, the batteries lasted about 8 hours. There is some room for error, as the length of the boosted bot's test run required it to be stopped, turned off, and restarted during lab occupancy intervals. The second phase of testing involved running a bot without the pack and a bot with the booster pack in demo mode in an enclosed space, running object avoidance using the laser and sonar. The un-boosted bot lasted roughly 2 hours while the boosted bot lasted roughly 4.5 hours. Video of testing can be found in the included attachments below labeled: "Testing_of_Booster_Pack"

Permissions

Persons/group who can view/change the page: REMOVE the first line to allow this topic to be seen by all

- Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

The FRCV Battery Booster for Pioneer 3AT Robots

This project started as a way to support the need for more power longevity during testing with the Pioneer 3AT robots. They are equipped with 3 12V batteries which on full charge will power about 60 to 90 minutes of robot activity at most. The 'add on' batteries used for this pack were three 12V 12AH Lead Acid Casil Batteries about 4” by 6”. While these batteries were several inches too large to fit in to the robot itself, the voltage and current were compatible enough that they could easily be added in parallel to the standard batteries used. And they had a higher AH rating than the usual 12V robot batteries (7 to 9 AH). In order for the batteries to be easily added to the bot without interfering with functionality or taking up the valuable real estate the top of the bot offers, a mount had to be constructed. The connector we used between the batteries and the bot itself was a standard RV connector -- allowing for a quick connect/disconnect.
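As a rough cross-check of the capacity argument, the expected gain in run time can be estimated from the amp-hour ratings alone. The sketch below assumes that the three onboard batteries are also wired in parallel, that capacities simply add, and that the load is the same with and without the pack. Real lead-acid batteries deviate from this ideal, so the numbers are only indicative. The function names are made up for this example.

```python
def parallel_capacity_ah(capacities_ah):
    """Ideal capacity of same-voltage batteries wired in parallel: the sum."""
    return sum(capacities_ah)


def predicted_runtime_ratio(onboard_ah, booster_ah):
    """Boosted run time divided by un-boosted run time, for an unchanged load."""
    return (onboard_ah + booster_ah) / onboard_ah


if __name__ == "__main__":
    booster = parallel_capacity_ah([12, 12, 12])  # three 12 AH add-on batteries
    for per_battery_ah in (7, 9):  # rating range quoted for the robot batteries
        onboard = parallel_capacity_ah([per_battery_ah] * 3)
        ratio = predicted_runtime_ratio(onboard, booster)
        print(f"onboard {onboard} AH + booster {booster} AH -> x{ratio:.2f} run time")
```

Under these assumptions the pack would be expected to extend run time by a factor of roughly 2.3 to 2.7, which is in the same range as the factors of about 2 and 2.25 observed in the two test phases (4 hours versus 8 hours, and 2 hours versus 4.5 hours).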

Permissions

- Persons/group who can view/change the page

- Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

Stereo Server and Client

The stereoServer code is based on the Aria serverDemo/clientDemo code. Changes to the basic Aria code include:

1. Use of the joystick is supported in the client demo.

2. Multiple robots can be used.

3. There is a single command to trigger a multi-pan or multi-tilt stereo scan.

Client code

The client code was modified from the Aria clientDemo in /usr/local/Aria/Arnetworking/examples/clientDemo.cpp. The easiest way to compile this is to rename the original clientDemo.cpp and substitute this one, then use the makefile in /usr/local/Aria/Arnetworking/examples/ to make the executable. There are several versions of this program. The principal difference is whether they support the multiple robot interface commands.

Version 1.0 was an edit of the Aria clientDemo to support moving the robot with the joystick, and to send the doStereoScan server command. To work correctly it needs to be connected to the stereoServer program, not the (Aria) serverDemo program. serverDemo will run okay under joystick control, but will ignore the new commands such as doStereoScan.

Version 2.0 was modified to allow control of the DPPU pan and tilt angles during a scan by sending the doStereoPan and doStereoTilt commands, and also to allow connection to multiple robots (instances of serverDemo or stereoServer). Commands were added to allow robot moves to be directed to each robot or broadcast to all robots. Unfortunately, because of network delays, broadcasting to all robots does not mean they all move the same.

Version 3.0 added support for control of the visual saliency architecture and automatic selection and data gathering motions for likely landmarks. This has to be run with the correct version of stereoServer to run the commands on the robot.

Here is the joystick button mapping for V3.0:

- b1 - trigger, has to be pressed for motion to occur.

- b2 - do a stereoscan.

- b3 - broadcast robot commands.

- b4 - send to robot 0 only.

- b5 - send to robot 1 only.

- b8 - enable the saliency architecture on the next stereoscan.

- b9 - allow the robot to carry out an automatic saliency move.

Here is the source of the latest version (v3):

Server code

The server code is a heavily modified version of the serverDemo.cpp program in /usr/local/Aria/Arnetworking/examples. You need a new makefile to make it; you can't use the original from Aria because of the dependence on the stereo camera (which is captured in the source files stereoCamera.[cpp,h]). There have been several versions of the server code. The logfile that the server writes has the version number at the top.

Version 1.0 was the modified (Aria) serverDemo.cpp program with support for the new server command doStereoScan.

Version 2.0 added support for the doStereoPan and doStereoTilt server commands (which had arguments, so were more difficult). The output format was changed to write GPS data, but the GPS support code was probably not correct.

Version 2.1 has support for older Aria that does not support GPS, and using serialGPS.cpp it writes GPS (north/west) and TCM2 (roll/pitch/compass/temp) data to the logfile for each stop.

Version 2.2 has support for the saliency architecture, identifying salient landmarks and plotting either a confined space (corridors) or open space (room or outside) set of saliency actions to gather the landmark data. In addition to the stop log, there is now also a landmark log and a separate set of landmark datasets. If you are only interested in landmarks, you can just copy the landmark datasets, images and log.

Here are the main source files and the Makefile for the latest version:

- stereoCamera.cpp: stereoCamera.cpp; interface to the BumbleBee

- stereoCamera.h: stereoCamera.h

- stereoServer.cpp: stereoServer.cpp

- checkSalience.cpp: checkSaliency.cpp; implements the saliency architecture

- landmarkList.h: landmarkList.h; support for manipulating lists of landmarks

- serialGPS.cpp: Read the GPS directly using serial port (because old Aria did not do this).

- Makefile: Makefile

register.cpp Notes and Instructions

Executable takes the log file as a command line argument. Assumes that PCL

- Change the header in the pcd files from "rgb" to "rgba" (or modify rcv2pcd and reconvert your rcv point cloud files to pcd files with that header).

- Change the "PointXYZ" data type in register.cpp to "PointXYZRGBA"

- register.cpp

- CMakeLists.txt

- FindPCL.cmake

- Within that directory: mkdir build; cd build; cmake ..; make register

- You need to convert the rcv point clouds to pcd. I downloaded all the rcv point clouds into a folder called "pointclouds_rcv". Using the bash script, I just tack on a ".pcd" to the txt filenames for simplicity, and the version of rcv2pcd I posted takes input and output filenames (I think I didn't change anything). Here's the bash script I used:

for i in pointclouds_rcv/*; do
    ./rcv2pcd "$i" "$i.pcd"
done

- Then move the log file and the executable into the folder with the pcd files. I had some issues with the 14th set of scans, so I just deleted them from the log file.

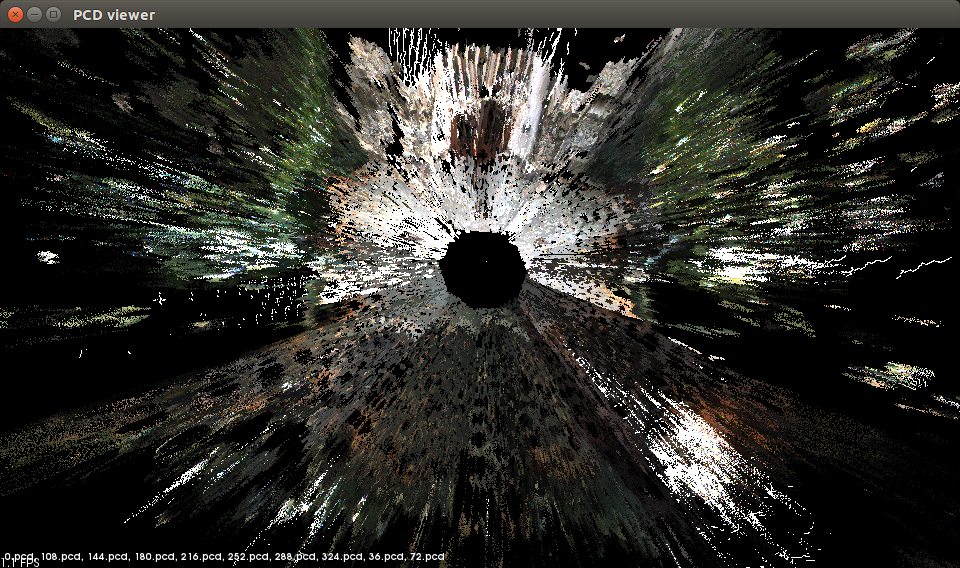

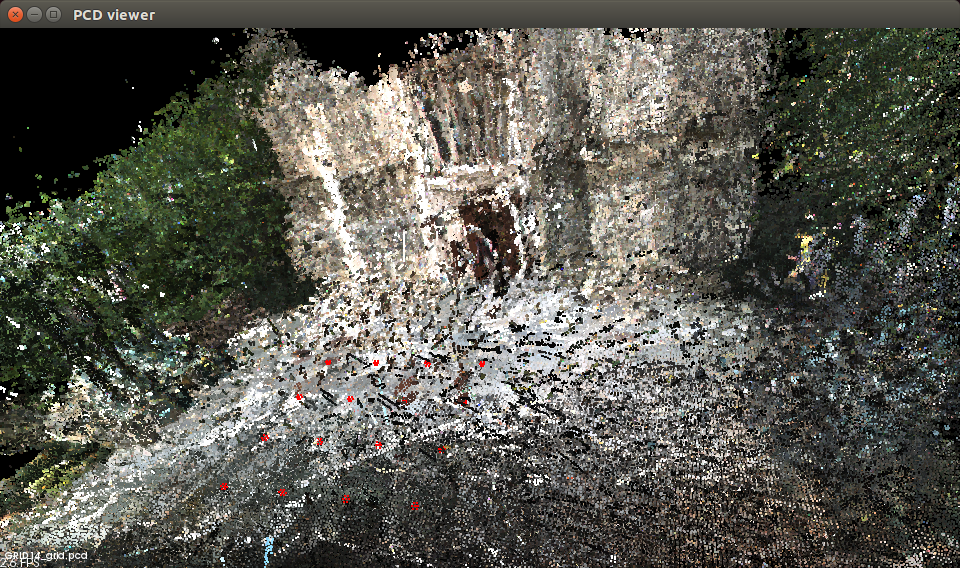

- Finally, it outputs to hallway.pcd. You can view this by: pcd_viewer hallway.pcd.

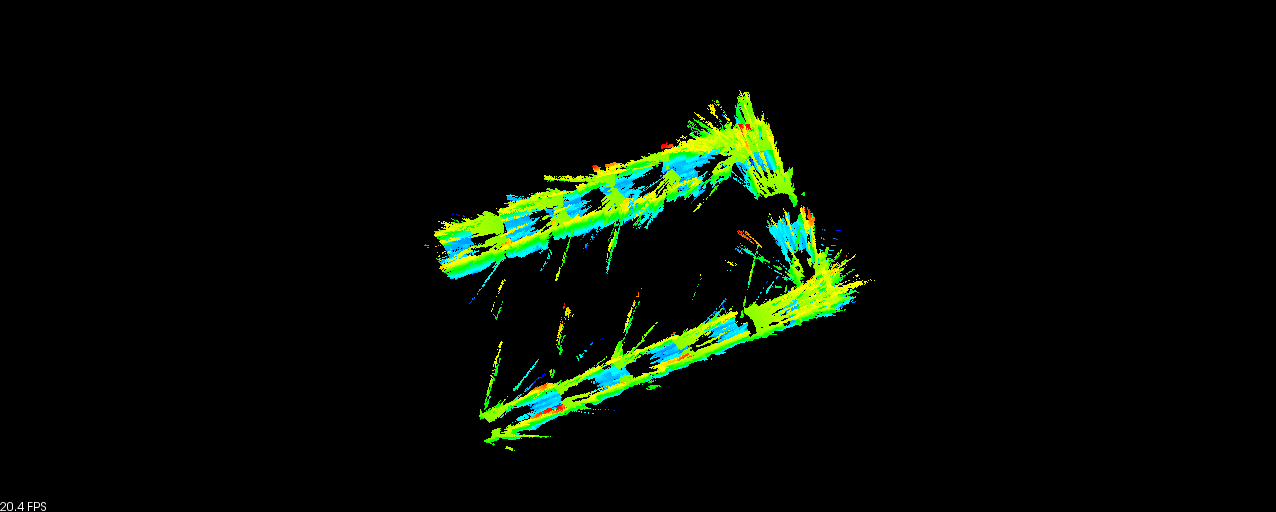

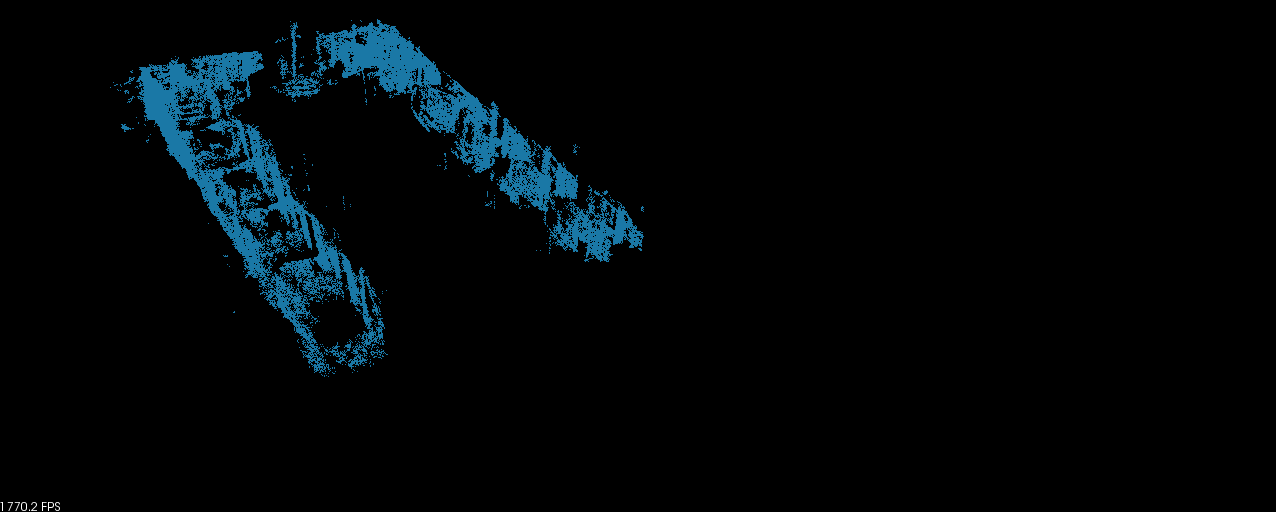

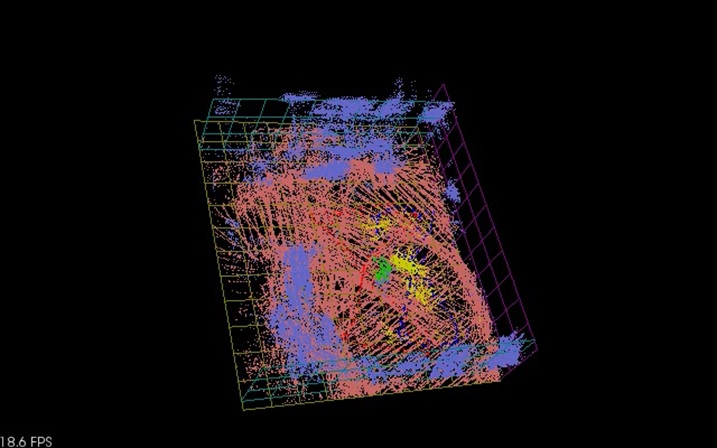

Image 1 (register.cpp v1): No pre-processing; only odometry estimates--ca. 1.8 million points

Image 2 (register.cpp v2): statistical removal and downsampling of each individual point cloud--ca. 1.4 million points

hallway2_screenshots.tar.bz2

* Set ALLOWTOPICVIEW = FRCVRoboticsGroup

* Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

-- StephenFox - 2011-06-15

Effect of Field of View in Stereovision-based Visual Homing

D.M. Lyons, L. Del Signore, B. Barriage

Abstract

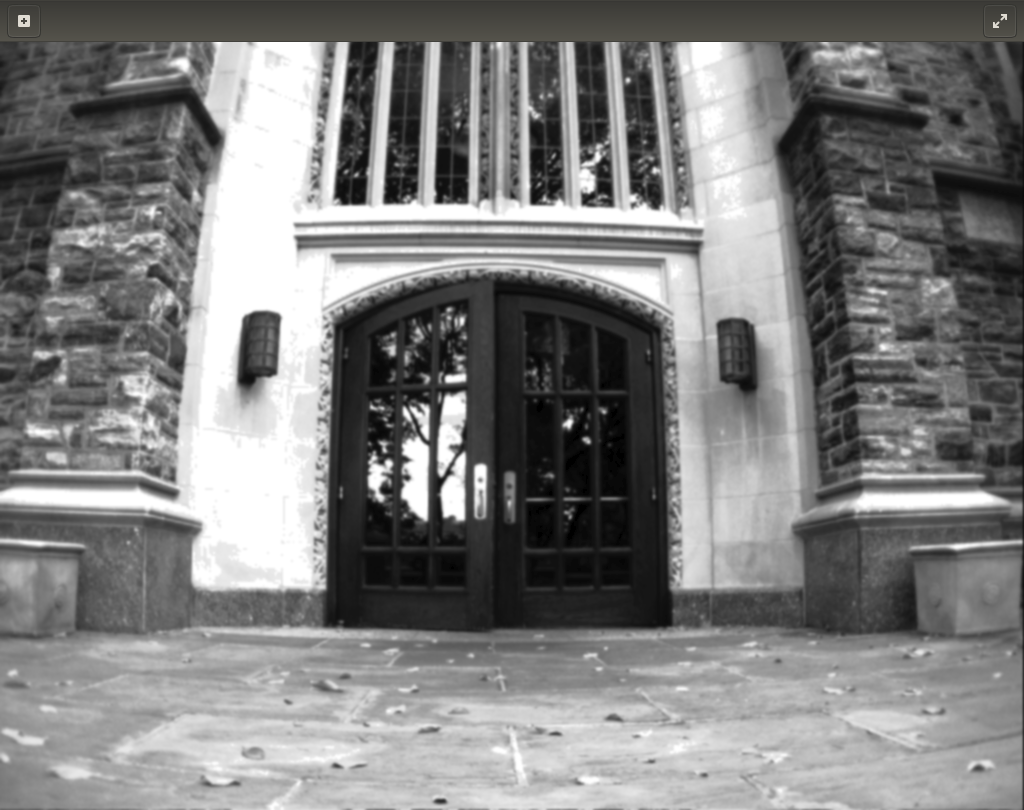

Navigation is challenging for an autonomous robot operating in an unstructured environment. Visual homing is a local navigation technique used to direct a robot to a previously seen location, and inspired by biological models. Most visual homing uses a panoramic camera. Prior work has shown that exploiting depth cues in homing from, e.g., a stereo-camera, leads to improved performance. However, many stereo-cameras have a limited field of view (FOV). We present a stereovision database methodology for visual homing. We use two databases we have collected, one indoor and one outdoor, to evaluate the effect of FOV on the performance of our homing with stereovision algorithm. Based on over 100,000 homing trials, we show that contrary to intuition, a panoramic field of view does not necessarily lead to the best performance, and we discuss the implications of this.

Database Collection Methodology

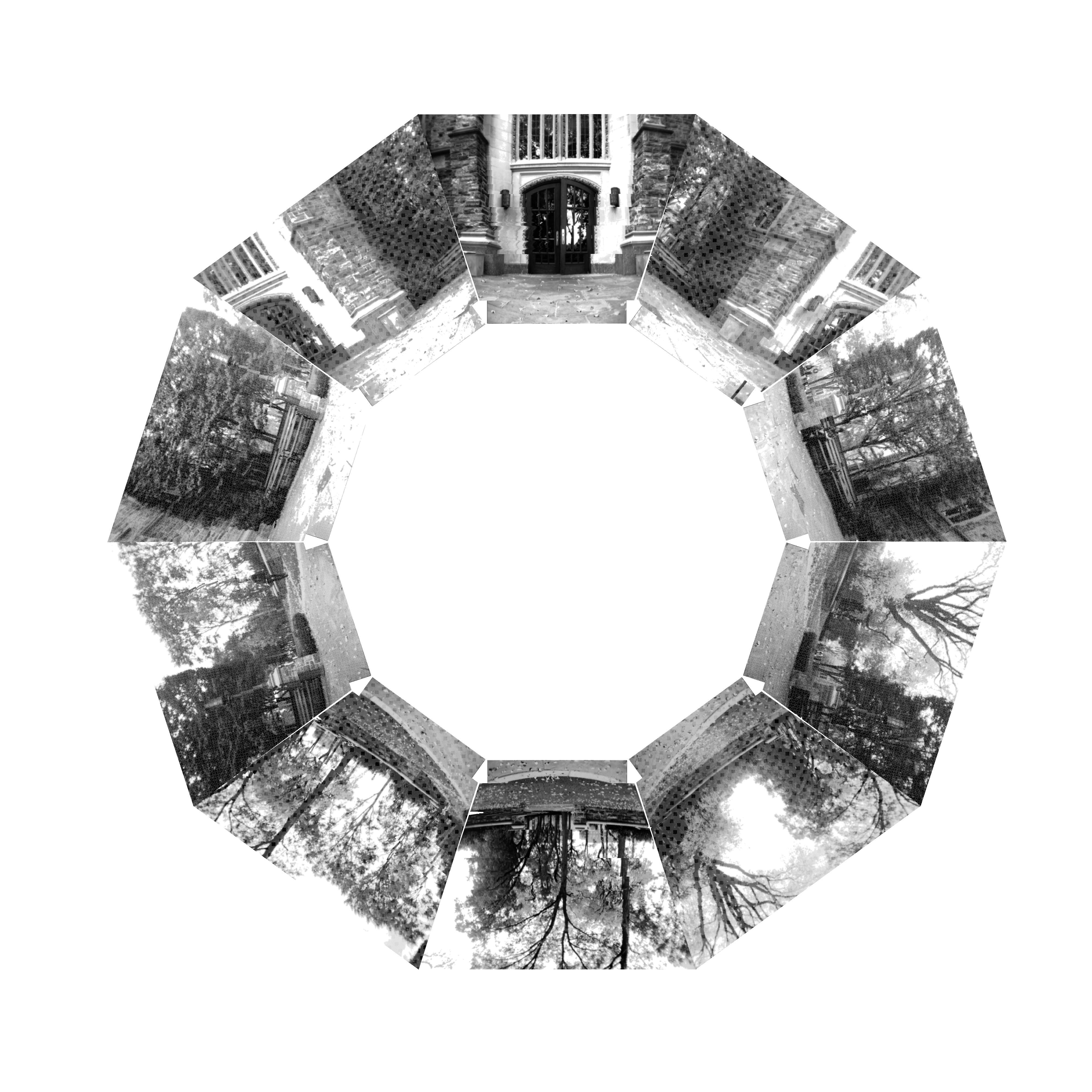

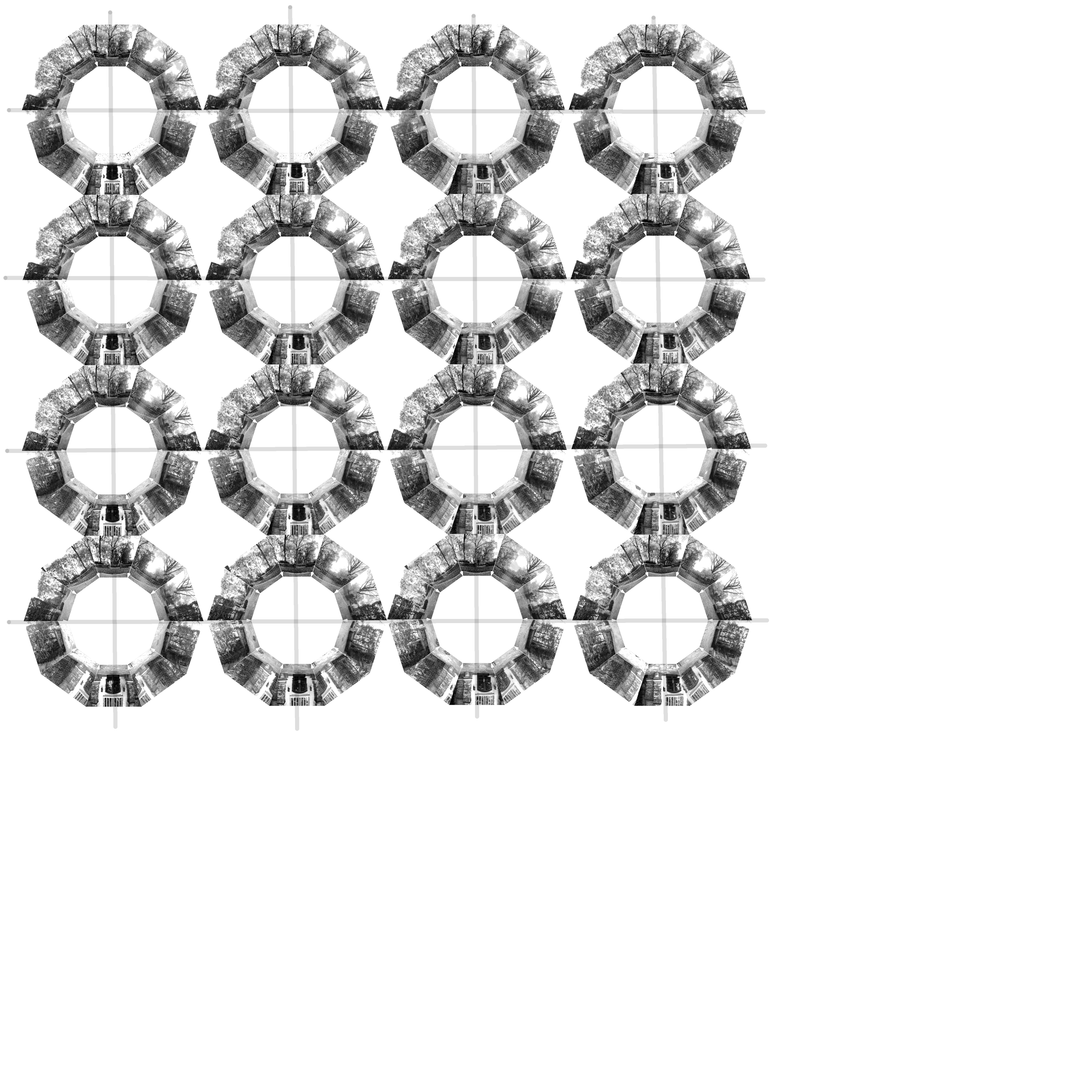

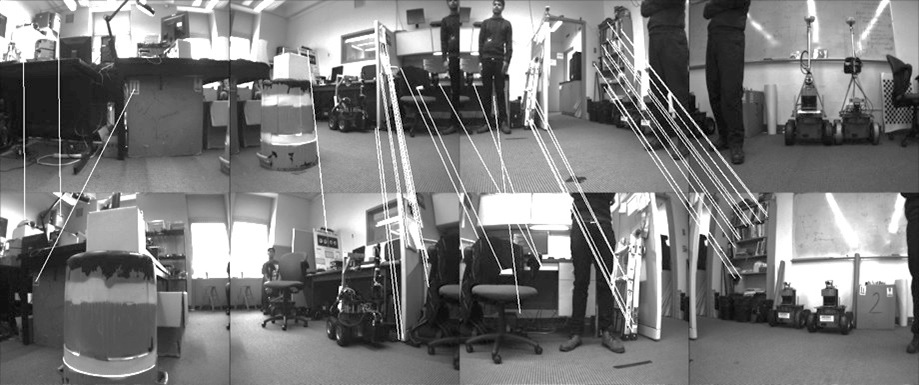

The robot platform used by Nirmal & Lyons [1] was a Pioneer 3AT robot with a Bumblebee2 stereo-camera mounted on a Pan-Tilt (PT) base. The same platform is used to collect stereo homing databases for this paper. As in prior work [2], a grid of squares is superimposed on the area to be recorded, and imagery is collected at each grid location. We need to collect a 360 deg FOV for the stereo data at each location. This will allow us to evaluate the benefit of FOVs from 66 deg up to 360 deg. The Bumblebee2 with 3.8 mm lens has a 66 deg horizontal FOV for each camera. The PT base is used to rotate the camera to construct a wide FOV composite stereo image. Nirmal & Lyons construct this image by simply concatenating adjacent (left camera) visual images and depth images into a single composite visual and depth image. This is quicker than attempting to stitch the visual images and integrate the depth images, and Nirmal & Lyons note the overlap increases feature matching – a positive effect for homing. The PT base is used to collect 10 visual and depth images at orientations 36 deg apart starting at 0 deg with respect to the X axis. The RH coordinate frame has the X axis along the direction in which the robot is facing, the Y pointing left, and is centered on the robot. The final angle also requires the robot to be reoriented, due to pan limits on the PT unit. The overlap in horizontal FOV (HFOV) between adjacent images is approximately 50%. Each visual image is a 1024x768 8-bit gray level image, and each depth image is a listing of the (x, y, z) in robot-centered coordinates for each point in the 1024x768 image for which stereo disparity can be calculated. The visual images and depth files are named for the orientation at which they were collected. The visual images are histogram equalized, and the stereo images statistically filtered, before being stored.
The grid squares for a location are numbered in row-major order i in { 0, ..., nxn } with a folder SQUARE_i containing the 10 visual and 10 depth images for each. The resolution of the grid r is the actual grid square size in meters and is used to translate any position p = (x, y) in { (0,0), ..., ((n-1)r, (n-1)r) } to its grid coordinates and hence to a SQUARE_i folder. The orientation of the robot theta is used to determine which images to select. For example, in the Nirmal & Lyons HSV algorithm, at orientation theta, the image at (theta div 36)*36 is the center image, and two images clockwise (CW) and two counterclockwise (CCW) are concatenated into a 5 image wide composite image for homing. Of course the database can be used in other ways -- the images could be stitched into a panorama for example.

Databases

The results in this paper were produced with two stereo databases: one for an indoor, lab location (G11) and one for an outdoor location (G14). For both databases, r=0.5 m and n=4.
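The grid lookup and image selection described in the methodology can be sketched as follows, using r = 0.5 m and n = 4 as for both databases. This is a minimal illustration under stated assumptions, not the authors' code: it assumes that x selects the column and y the row, that the row-major index starts at 0, and that positions snap to the nearest grid point. The function names position_to_square and composite_angles are invented for this sketch.

```python
def position_to_square(x, y, r, n):
    """Map a position (x, y) in meters to a row-major grid index.

    Assumptions: x selects the column, y selects the row, the index
    starts at 0, and positions snap to the nearest grid point.
    """
    col = min(max(int(x / r + 0.5), 0), n - 1)
    row = min(max(int(y / r + 0.5), 0), n - 1)
    return row * n + col


def composite_angles(theta_deg, width=5, step=36):
    """Orientation labels of the images that make up a composite image.

    The center image is at (theta div step) * step; width // 2 neighbors
    are taken on each side, wrapping around at 360 degrees.
    """
    center = (int(theta_deg % 360) // step) * step
    half = width // 2
    return [(center + k * step) % 360 for k in range(-half, half + 1)]


if __name__ == "__main__":
    index = position_to_square(1.0, 0.5, r=0.5, n=4)
    print(f"SQUARE_{index}", composite_angles(100))
```

For a robot at (1.0, 0.5) with orientation 100 deg, this selects grid index 6 and the five images labelled 0, 36, 72, 108 and 144, with 72 as the center image. Which side of the list counts as clockwise depends on the sign convention for theta, which the sketch leaves open.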

Figure 1: Grid 14 (left), Grid 11 (right), representative images

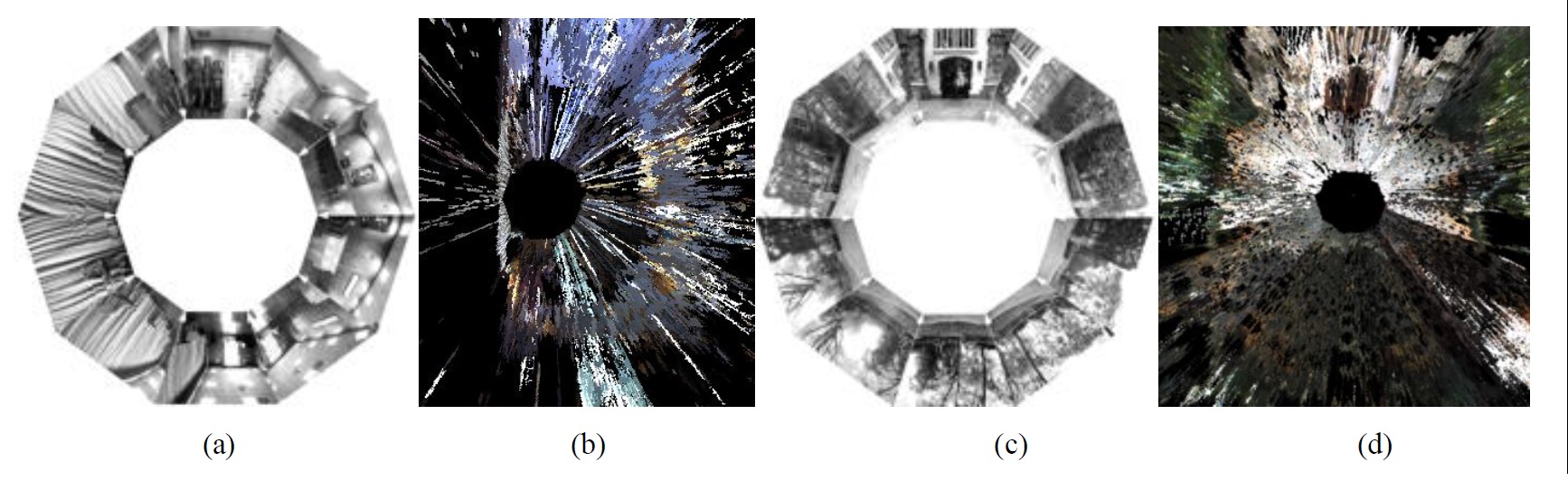

Figure 2: (a) Set of visual images displayed (keystone warped only for display purposes) at the orientation they were taken for a single square on the G14 database and (b) point cloud visualization of the stereo depth information for the same square.

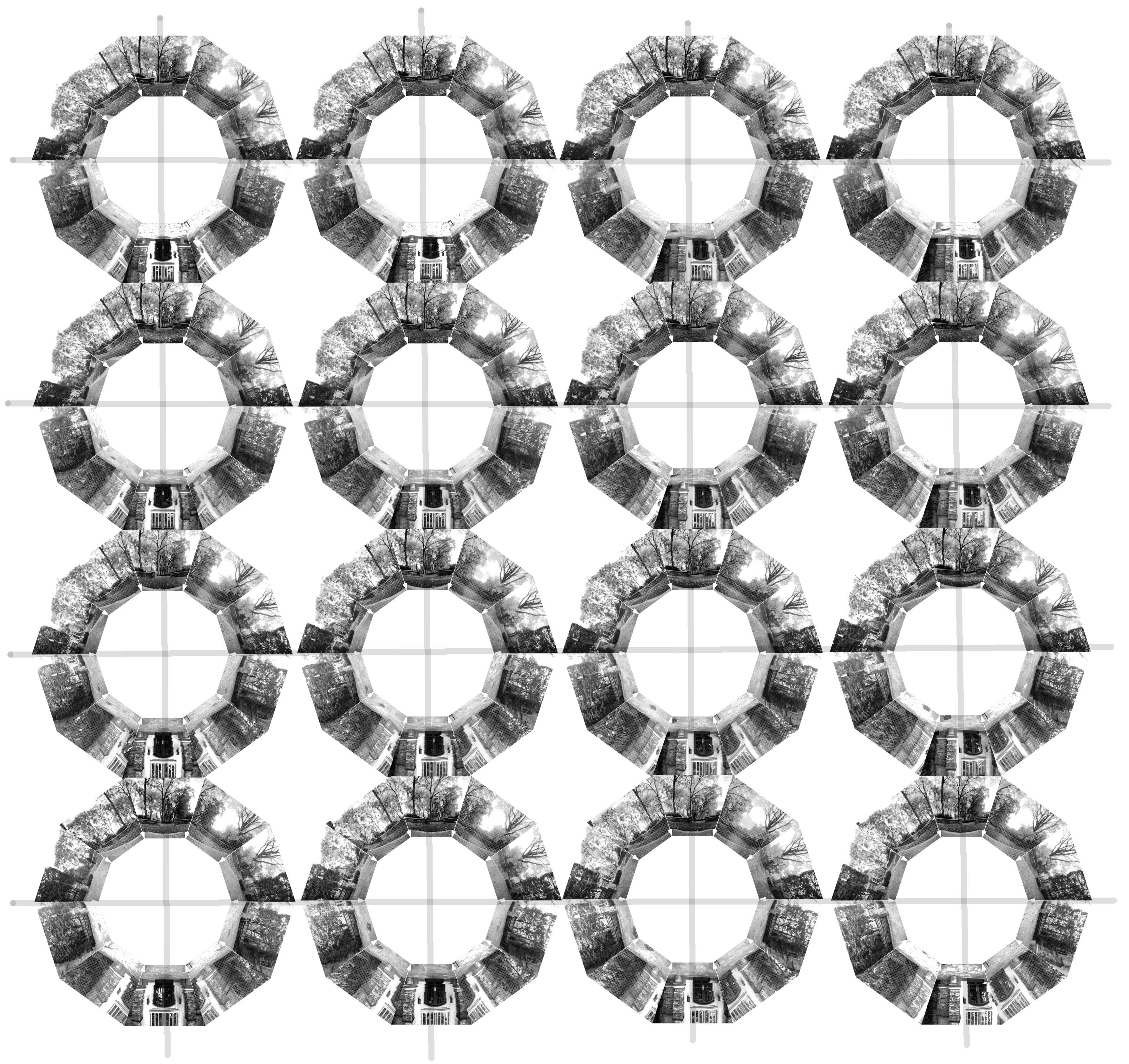

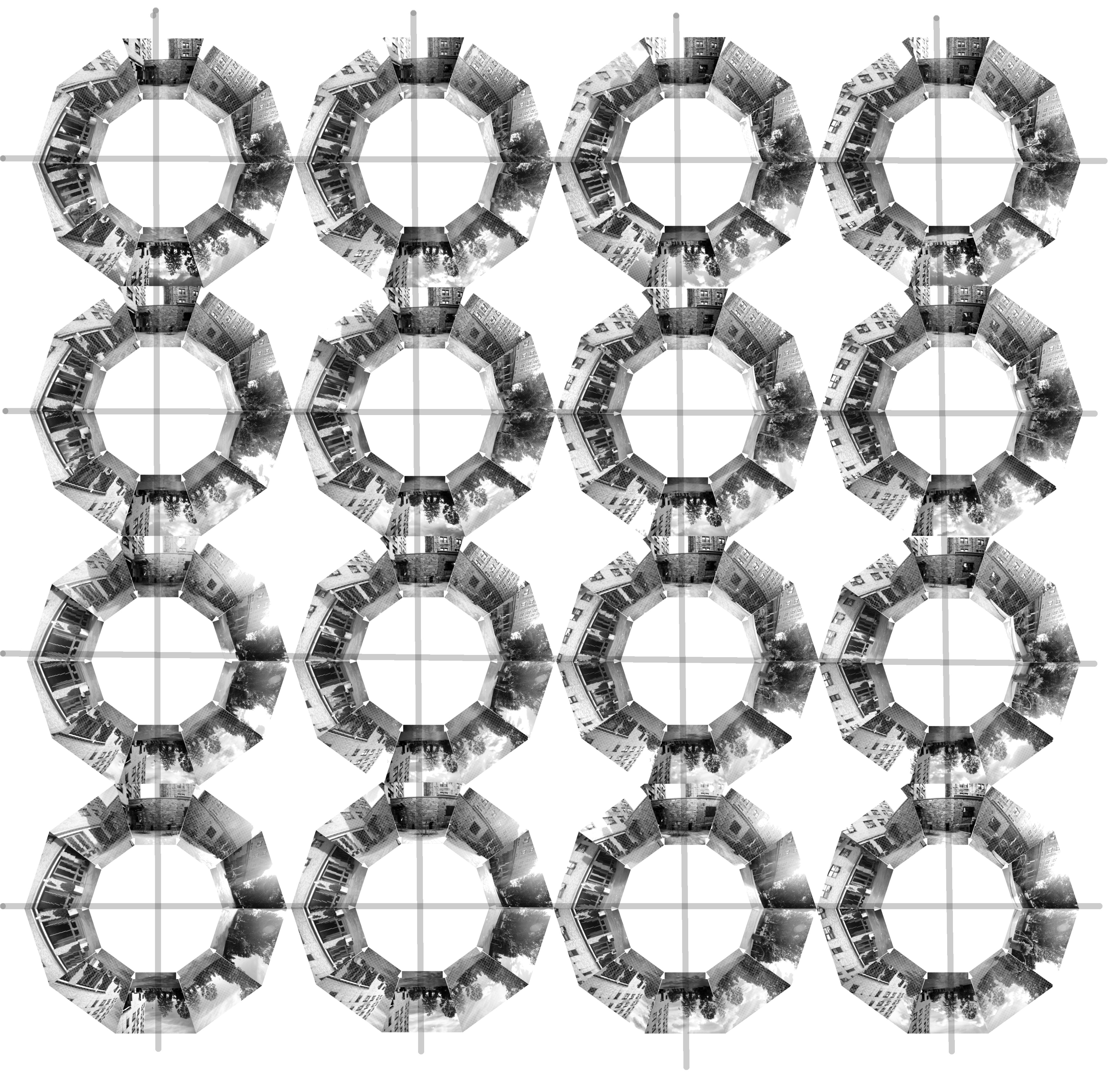

Figure 3: Grid of images for the G14 location. Each square shows the 10 visual images for that grid square in the same format as Figure 2(a).

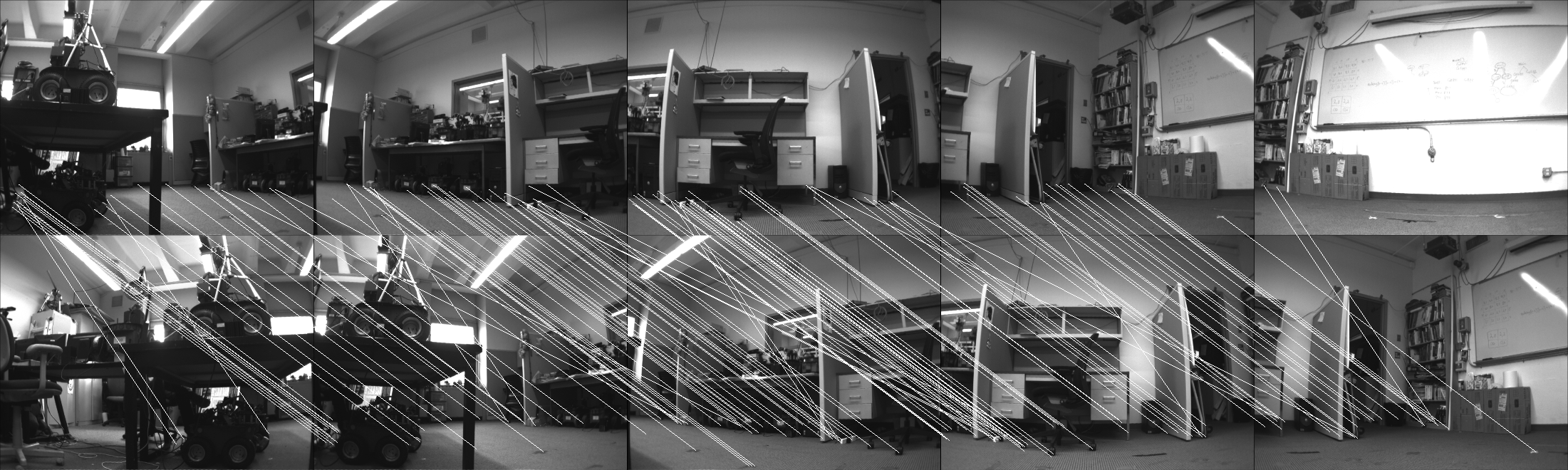

Figure 5: Wide field of view SIFT matching of home image (top) and current image (bottom) with lines between matched features (G11 database).

Repository Information

1. Files below labelled GRIDXX_grids contain visual image folders, while those labelled GRIDXX_cleanDump contain stereo depth data.

2. Histogram smoothing was performed on the visual images of GRID14, an outdoor grid, in order to compensate for lighting conditions.

3. A statistical filtering program, from the point cloud library, was executed on stereo-depth to clean up some stereo noise data. The parameters of the point cloud statistical filter were a meanK of 50 and a standard deviation threshold of 0.2.

Datasets

* GRID11_cleanDump.tar.gz: Clean Data Dump folder for HSVD project. 11/7/16

* GRID14_cleanDump.tar.gz: Clean Data Dump folder for HSVD project. 11/7/16

* GRID14_grids.tar.gz: Grid folder for HSVD project post smoothing. 12/5/16

* GRID11_grids.tar.gz: Grid folder for HSVD project. 2/23/17

This data is provided for general use without any warranty or support. Please send any email questions to dlyons@fordham.edu, bbarriage@fordham.edu, and ldelsignore@fordham.edu.

References

[1] P. Nirmal and D. Lyons, "Homing With Stereovision," Robotica, vol. 34, no. 12, 2015.

[2] D. Churchill and A. Vardy, "An orientation invariant visual homing algorithm," Journal of Intelligent and Robotic Systems, vol. 17, no. 1, pp. 3-29, 2012.

Permissions

* Persons/group who can change the page:

* Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

-- (c) Fordham University Robotics and Computer Vision

The Evaluation of Field of View Width in Stereovision-based Visual Homing: Data repository

D.M. Lyons, B. Barriage, L. Del Signore

Abstract of the paper

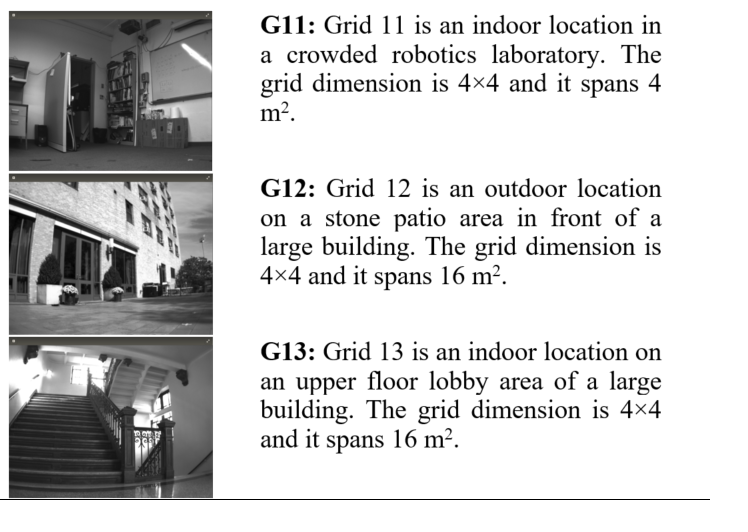

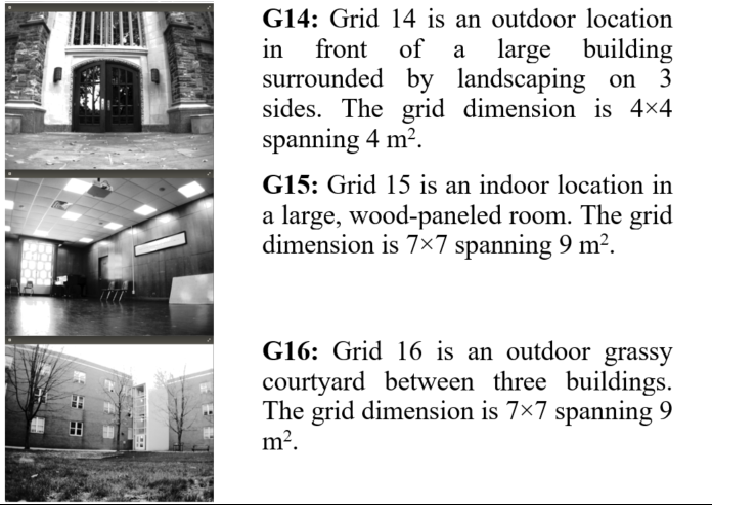

Visual homing is a bioinspired, local navigation technique used to direct a robot to a previously seen location by comparing the image of the original location with the current visual image. Prior work has shown that exploiting depth cues such as image scale or stereo-depth in homing leads to improved homing performance. In this paper, we present a stereovision database methodology for evaluating the performance of visual homing. We have collected six stereovision homing databases, three indoor and three outdoor. Results are presented for these databases to evaluate the effect of FOV on the performance of the Homing with Stereovision (HSV) algorithm. Based on over 350,000 homing trials, we show that, contrary to intuition, a panoramic field of view does not necessarily lead to the best performance and we discuss why this may be the case.

Overview of the Data Repository

This web page is a repository for six stereovision visual homing databases inspired by the panoramic image visual homing databases of Möller and others. Using these databases, we have conducted an evaluation of the effect of FOV on the performance of Nirmal & Lyons's HSV homing algorithm for a variety of visual homing missions in both indoor and outdoor situations totaling over 350,000 visual homing trials. The results indicate that while in general a wide FOV outperforms a narrower FOV, peak performance is not achieved by a panoramic FOV, and we discuss why that may be the case. This web page is the data repository for the databases used in the paper. The six databases are briefly overviewed in Table 1 below. The picture to the left on each row is a single representative picture from the database.

Data Collection Procedure

A spatial grid is superimposed on the area to be recorded, and panoramic visual imagery is collected at each grid cell. The information is used as follows. A robot begins a simulation run with a home position grid cell and a start position grid cell. The visual information the robot receives is the stored imagery for the grid cell it occupies. A motion vector is calculated by comparing this imagery to the home grid cell imagery. This motion may move the robot to another grid cell, and the imagery comparison and motion calculation continues. Homing algorithms differ in how these comparisons are done, how the motion vector is calculated, and how termination is decided. The robot used in our visual homing research is a Pioneer 3-AT robot with a Bumblebee2 stereo-camera mounted on a Pan-Tilt (PT) base. The Bumblebee2 with 3.8 mm lens has a 66 deg horizontal FOV for each camera. This limited FOV is one key challenge in building a visual homing database, since each grid cell in the database must contain 360 deg of imagery: a robot can arrive at a grid location with any orientation and the simulation should be able to retrieve the imagery for that orientation. This issue is addressed by using the PT base to collect 10 visual and stereo depth images at orientations 36 deg apart, starting at 0 deg with respect to the X axis. The visual image stored is the left image from the stereo pair. The RH coordinate frame has the X axis along the direction in which the robot is facing, the Y pointing left, and is centered on the robot. The final angle requires the robot to be reoriented (in addition to the PT unit motion) due to PT unit pan limits.
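The simulation procedure described above (look up the imagery for the current cell, compute a motion vector against the home imagery, move, repeat) can be sketched as a simple loop. This is only an outline under stated assumptions: cells are (column, row) pairs, each step moves at most one cell, and the run terminates on reaching the home cell. The function toy_motion is a hypothetical stand-in that steps toward home using cell coordinates directly; a real homing algorithm such as HSV would instead compare the stored imagery of the two cells.

```python
def simulate_homing(start, home, n, motion_fn, max_steps=50):
    """Run one database-driven homing trial on an n x n grid.

    start, home: (col, row) grid cells.
    motion_fn(cell, home) -> (dcol, drow): stand-in for the homing
    algorithm's motion vector, already quantized to grid steps.
    Returns the list of visited cells.
    """
    cell = start
    path = [cell]
    for _ in range(max_steps):
        if cell == home:  # placeholder termination test
            break
        dcol, drow = motion_fn(cell, home)
        # Keep the robot inside the recorded grid.
        cell = (
            min(max(cell[0] + dcol, 0), n - 1),
            min(max(cell[1] + drow, 0), n - 1),
        )
        path.append(cell)
    return path


def toy_motion(cell, home):
    """Hypothetical motion rule: one grid step toward home on each axis."""
    def sign(v):
        return (v > 0) - (v < 0)

    return sign(home[0] - cell[0]), sign(home[1] - cell[1])


if __name__ == "__main__":
    print(simulate_homing((0, 0), (3, 2), n=4, motion_fn=toy_motion))
```

Swapping toy_motion for a function that loads the images of both cells and derives a motion vector from matched features would turn this outline into an actual homing trial. The step limit guards against algorithms that never reach the home cell.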

Figure 1: (a, c) Set of visual images (displayed keystone warped only for display purposes) for a single square on the G15 and G14 databases and (b, d) point cloud display of all the stereo depth information from directly overhead (the XY plane) for the same squares, respectively.

Figure 2: Grid of all 160 images for the G12 and G14 databases. Each grid cell shows the 10 visual images for that cell in the same format as Figure 1(a), and for each database the cells are arranged in the spatial order and orientation they were collected.

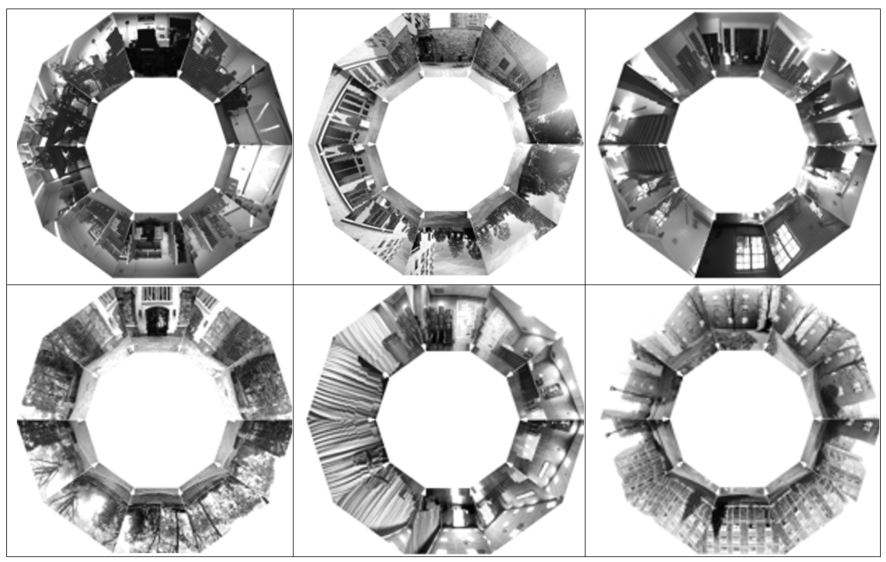

Figure 3: Set of all 10 visual images (displayed keystone warped only for display purposes) for a single cell from the G11 (top left) through G16 (bottom right) databases respectively.

Figure 4: (left) A composite point cloud created from all 160 clouds (each in the form of Figure 1(b)) of G14 and registered only using the spatial relationship of the grid; (right) shows this for the 490 clouds in G16. The grid of darker circles superimposed on the data in the foreground of each shows the grid vertices.

A note on folder naming and orientation labeling

The depth files and the visual files are stored separately. For example, the depth files for G11 are stored in a folder called GRID11 with subfolders SQUARE1 through SQUARE16. The visual data for G11 is also stored in a folder called GRID11 with subfolders SQUARE1 through SQUARE16. So to download the data, it's best to make two folders, Visual and Depth (for example), and download all the visual data to one and the depth data to the other -- since the visual and depth folders for each database otherwise will have the same name! Each set of visual images for a cell is 7.5 MB in size and each set of depth images is 200 MB on average. In the Visual folder GRID11, subfolders SQUARE1 to SQUARE16, the images are labelled IMAGEnnn.pgm where nnn is 0, 36, 72, 108, 144, 180, 216, 252, 288, 324. These angles are with respect to the full positive pan angle, so 0 is actually 180 deg with respect to the X axis of the robot coordinate frame. In the Depth folder GRID11, subfolders SQUARE1 to SQUARE16, the files are labelled dataDump_nnn_mmm.txt and these are text files. Each line of the text file has the entries:

X,Y,Z are the 3D coordinates of the point with respect to the robot coordinate frame,

u,v are the image coordinates for the point (and used to register this point with the visual image)

d is the stereo disparity

r, g, b is the pixel color.

The numbering is as follows: nnn is 0, 36, 72, 108, 144, -144, -108, -72, -36, and mmm is 0 for all except one file, which has nnn=-144 and mmm=-36; this marks the one rotation at which the pan unit was at its maximum of -144 and the robot base was rotated -36 to bring the robot to 180. The angle nnn in this case is with respect to the X axis of the robot coordinate frame. It's awkward that this is not the same labeling as for the visual data, and we will revise that in the next release.

Histogram smoothing was performed on the visual images of all outdoor grids, in order to compensate for lighting conditions. A statistical filtering program, from the point cloud library, was executed on all stereo-depth files to clean up some stereo noise. The parameters of the point cloud statistical filter were a meanK of 50 and a standard deviation threshold of 0.2.
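A reader script for the depth files might look like the sketch below. It assumes that the fields appear on each line in the order listed above (X, Y, Z, u, v, d, r, g, b) and that they are separated by commas and/or whitespace; neither detail is confirmed here, so check a sample file first. The names DepthPoint, parse_dump_name, scan_heading_deg and parse_depth_line are invented for this example.

```python
import re
from typing import NamedTuple


class DepthPoint(NamedTuple):
    x: float
    y: float
    z: float
    u: int
    v: int
    d: float
    r: int
    g: int
    b: int


_DUMP_NAME = re.compile(r"dataDump_(-?\d+)_(-?\d+)\.txt$")


def parse_dump_name(filename):
    """Return (nnn, mmm): pan angle and base rotation from the file name."""
    match = _DUMP_NAME.search(filename)
    if match is None:
        raise ValueError(f"not a dataDump file name: {filename}")
    return int(match.group(1)), int(match.group(2))


def scan_heading_deg(nnn, mmm):
    """Scan direction in [0, 360) relative to the robot X axis.

    Assumes the pan angle and the base rotation simply add.
    """
    return (nnn + mmm) % 360


def parse_depth_line(line):
    """Parse one line, assuming the field order X Y Z u v d r g b."""
    fields = [f for f in re.split(r"[,\s]+", line.strip()) if f]
    if len(fields) != 9:
        raise ValueError(f"expected 9 fields, got {len(fields)}")
    x, y, z = (float(f) for f in fields[0:3])
    u, v = (int(float(f)) for f in fields[3:5])
    d = float(fields[5])
    r, g, b = (int(float(f)) for f in fields[6:9])
    return DepthPoint(x, y, z, u, v, d, r, g, b)


if __name__ == "__main__":
    nnn, mmm = parse_dump_name("dataDump_-144_-36.txt")
    print(nnn, mmm, scan_heading_deg(nnn, mmm))
```

With this convention the special file dataDump_-144_-36.txt maps to a heading of 180 deg, matching the description above, and a file such as dataDump_-36_0.txt maps to 324 deg.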

Datasets

The visual data

* grid11Image.tar.gz: Grid 11 4x4 grid, image data.

* grid12Image.tar.gz: Grid 12 4x4 grid, image data.

* grid13Image.tar.gz: Grid 13 4x4 grid, image data.

* grid14Image.tar.gz: Grid 14 4x4 grid, image data.

* grid15Image.tar.gz: Grid 15 7x7 grid, image data.

* grid16Image.tar.gz: Grid 16 7x7 grid, image data.

The depth data

* grid11Depth.tar.gz: Grid 11 4x4 grid, depth data.

* grid12Depth.tar.gz: Grid 12 4x4 grid, depth data.

* grid13Depth.tar.gz: Grid 13 4x4 grid, depth data.

* grid14Depth.tar.gz: Grid 14 4x4 grid, depth data.

* grid15Depth.tar.gz: Grid 15 7x7 grid, depth data.

* grid16Depth.tar.gz: Grid 16 7x7 grid, depth data.

This data is provided for general use without any warranty or support. Please send any email questions to dlyons@fordham.edu, bbarriage@fordham.edu, and ldelsignore@fordham.edu. The short URL for this page is http://goo.gl/h3pU7Q

Permissions

* Persons/group who can change the page:

* Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

-- (c) Fordham University Robotics and Computer Vision

Evaluation of Field of View Width in Stereo-vision based Visual Homing

Overview

This dataset was collected by dml on 6-14-11 using the stereoServer and modified clientDemo software. Robot 116 was run around the U shaped 3rd floor of JMH.

Procedure

Robot 116, a Pioneer AT3 with Bumblebee stereo and DPPU pan-tilt, was used to collect this dataset. The robot was equipped with an onboard wireless AP to connect to the control laptop running the modified clientDemo that speaks to stereoServer. StereoServer version V2 was used to control robot 116. The robot was positioned at the Robot Lab end of the U shaped 3rd floor of Building JMH facing down the corridor. Then stereoServer was started. The pan tilt scan parameters were set to do a pan scan of 20 degrees, 0 degrees and -20 degrees at a fixed tilt of 5 degrees. Each set of 3 scans was repeated approximately every 5 meters along the U shaped corridor until the robot reached the end. There were 15 stops (labelled 0 to 14) in all, and the sequence of 3 pan angles was scanned at each stop, producing 45 stereo datasets.

Dataset

The dataset consists of:

The logfile, which documents the file name of each stereo dataset and the (odometry) x,y,z,th and pan,tilt at which the dataset was taken.

- run10839334_S0_S3.rar: run10839334_S3_S0.rar

- run10839334_S6_S4.rar: run10839334_S6_S4.rar

- run10839334_S10_S7.rar: run10839334_S10_S7.rar

- run10839334_S11_S14.rar: run10839334_S11_S14.rar

Fordham Robotics and Computer Vision Laboratory - Demos and Software

- Rotational Legged Locomotion: Pictures, Videos for novel triped robot.

- Robot "imagination": Pictures, Videos for using a 3D simulation to 'imagine' what might happen

- Terrain Spatiograms for Landmarks: Pictures and code for generating unique 3D spatiogram views of landmarks from point cloud data.

- Instructions for Demo: Step by step process of how to properly turn on robot, log into robot, and run demo files.

- Visual Homing using stereo-vision demo. Includes source code and instructions on how to run the demo.

- Old FRCV Lab Software and Demos page

Page protections

- Persons/group who can view/change the page:

- Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

RESOURCES: Fordham University, Robotics and Computer Vision Lab

Laboratory: The Robotics & Computer Vision Laboratory is in the Department of Computer & Information Science in Fordham University. The Lab includes several computing stations and servers with high-powered GPUs, many robot platforms including ground robots and drones, moving and stationary camera systems and other infrastructure for development, maintenance and repair of the platforms.

Computer: Fordham University has an extensive, multi-campus computing environment. The Department of Computer & Information Science has its own subnet connected to the main network. Department resources also span campuses and include multiple Windows and Linux workstations, six departmental servers, two HPC research clusters and full-time computing support staff.

Major Equipment: The Robotics & Computer Vision Lab at Fordham is a large indoor lab (25’ × 30’) with multiple student working locations, and includes the following major equipment:

Robots:

- 5 Pioneer AT-3s with SICK laser, PTZ camera, compass, gyro, onboard computer with WiFi

- 6 Pioneer AT-3s with digital stereo-cameras on Pan-Tilt base, compass, gyro, onboard computer with WiFi

- 5 Pioneer AT-3s with PTZ camera, each with 5-DOF arm, compass, gyro, onboard computer with WiFi

- 1 Pioneer DX-2 with fixed stereo-camera

- >10 Crazyflie drones equipped with Flowdecks & Loco positioning system for drone localization

- 2 Parrot drones

- 6 ROS turtlebots (4 Burger Pi, 1 Burger, 1 Waffle Pi)

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = FRCVLabGroup

Fordham Robotics & Computer Vision

Laboratory TWIKI

| Web | Description | Links |
|---|---|---|
| | TWiki home with users and groups for access control | |
| | TWiki documentation, welcome guest and user registration | |
| | Sandbox web to experiment in an open hands-on area | |

Permissions

- Persons/group who can view/change the page:

- Set ALLOWTOPICCHANGE = FRCVRoboticsGroup

» FRCVLabGroup

Use this group for access control of webs and topics.

- Member list:

- Set GROUP = TWikiAdminUser, DamianLyons, LabTech, MaggieGates

- Purpose of this group:

- Set DESCRIPTION =

- Persons/group who can change the list:

- Set ALLOWTOPICCHANGE = FRCVLabGroup

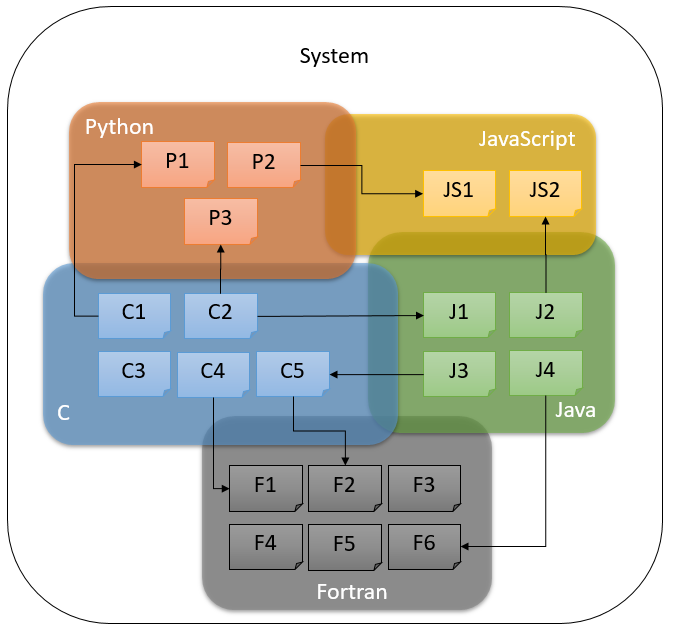

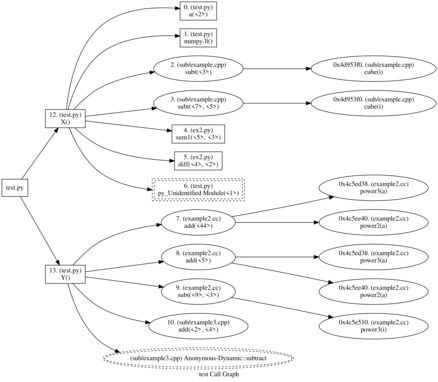

MultiLingual Static Software Analysis

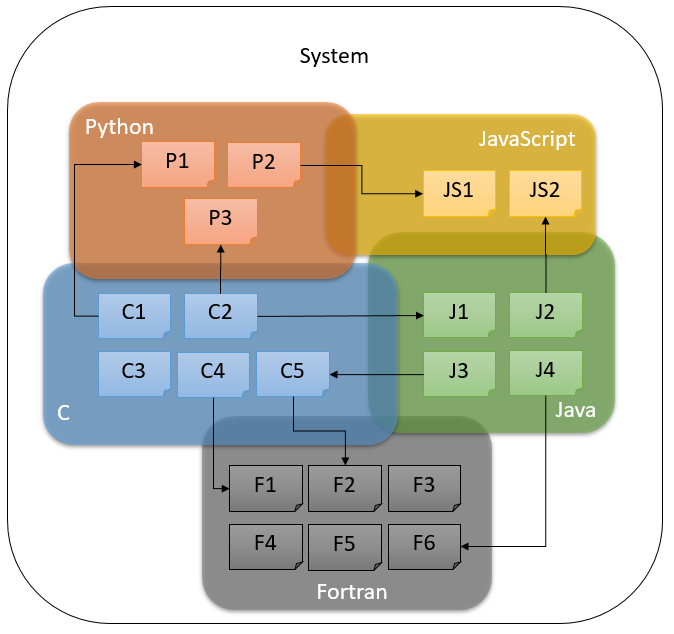

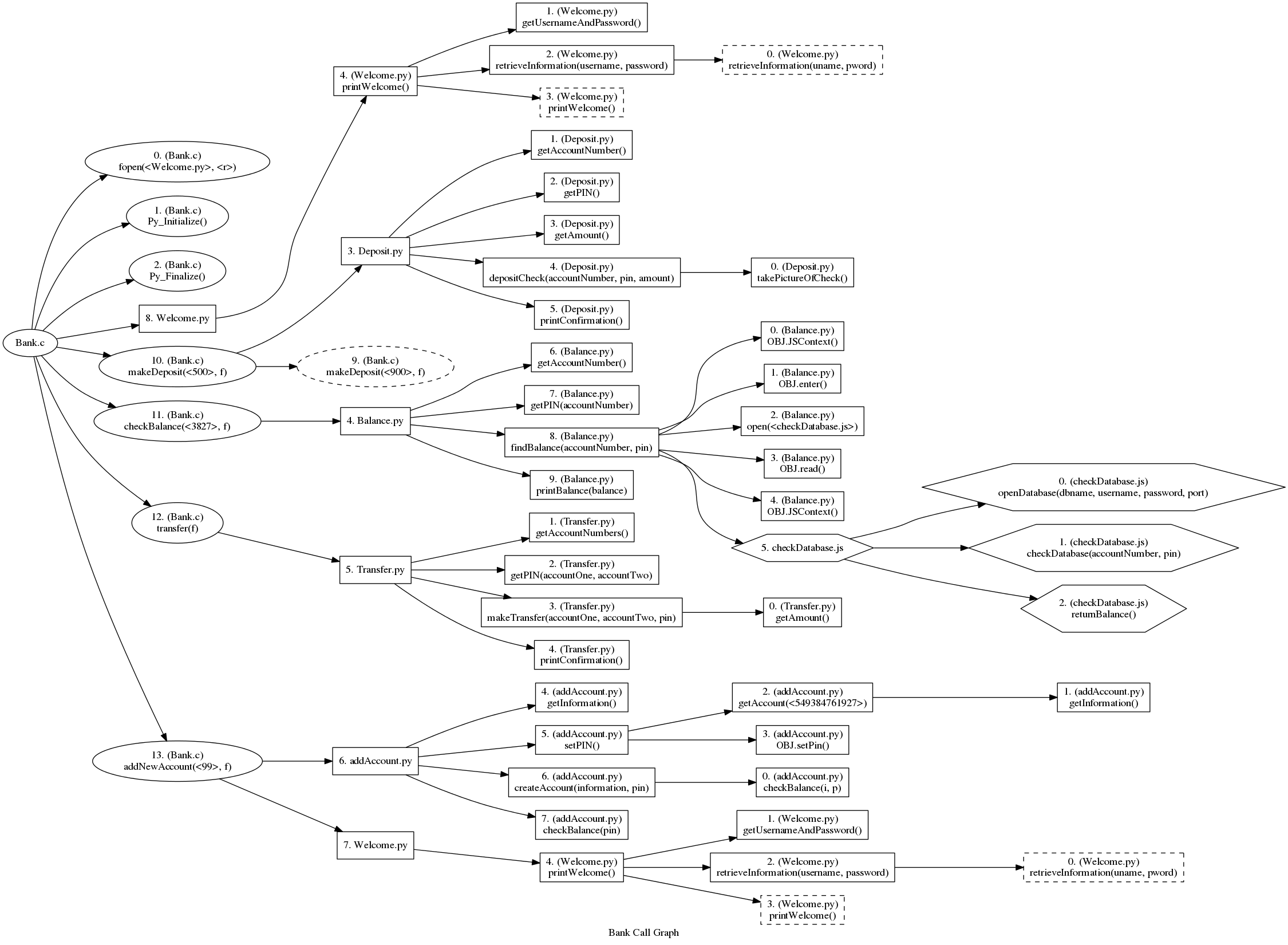

Our objective is to provide open-source tools that help analyze the way multilingual code interoperates to address security issues, software design and refactoring, efficiency and correctness. The first step is to create call graphs that represent the relationship between C/C++, Python, and JavaScript programs. The MultiLingual Static Software Analysis software tool (MLSA, pronounced Melissa for convenience) is a tool that analyzes software that is written in multiple languages and in which the languages call each other and produces a multi-lingual call graph.

The MLSA software tool reviews function (procedure) calls within a set of source code files. It generates a call graph in csv/graphviz format with formatted information about function calls, their arguments, and the files they are in. The tool is currently capable of analyzing programs in C/C++, Python and JavaScript in which a C/C++ program calls Python code through the python.h interface, a Python program calls a C/C++ procedure using the pybind11 interface, a Python program calls JavaScript code through PyV8's eval function, or a JavaScript program calls Python code through JQuery's ajax command. The result in all cases is a call graph that includes procedures in all three languages showing their mutual call relationships. For more details, read on.
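To make the idea of a multilingual call graph in csv/graphviz form concrete, here is a small sketch that writes a list of call records as CSV rows and as a Graphviz DOT digraph. It is not MLSA's implementation and does not reproduce MLSA's file layout: the Call record, the column names, the sample calls and the function names to_csv and to_dot are all assumptions made for illustration.

```python
import csv
import io
from typing import NamedTuple, Tuple


class Call(NamedTuple):
    caller: str            # calling procedure
    callee: str            # called procedure
    file: str              # source file containing the call
    args: Tuple[str, ...]  # textual arguments of the call


def to_csv(calls):
    """Render call records as CSV text (hypothetical column layout)."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["caller", "callee", "file", "arguments"])
    for c in calls:
        writer.writerow([c.caller, c.callee, c.file, " ".join(c.args)])
    return buf.getvalue()


def to_dot(calls):
    """Render call records as a Graphviz DOT digraph, one edge per call."""
    lines = ["digraph callgraph {"]
    for c in calls:
        lines.append(f'  "{c.caller}" -> "{c.callee}";')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical calls crossing language boundaries: C to Python to JavaScript.
calls = [
    Call("main", "analyze", "main.c", ("data",)),
    Call("analyze", "render", "analyze.py", ("result", "width")),
]

if __name__ == "__main__":
    print(to_csv(calls))
    print(to_dot(calls))
```

The DOT text can be passed to Graphviz tools such as dot to draw the graph. Because each edge carries only procedure names, procedures from different languages appear in the same graph, which is the property the multilingual call graph relies on.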

Background

Architecture

System Requirements

Installation

Execution

IG/repostats.py

IG/cFunCall2.py

Filters and Pipelines

Data Files

Status per Module

Known Issues for Version 0.1

Future Work

Ongoing Work

- multilingual system:

Background

Large software projects typically have components written in different languages. Companies that have a large software codebase may face the issue of applying security, efficiency and quality metrics for a product spanning many languages. A developer or developer organization may choose one language for numerical computation and another for user interface implementation, or they may have inherited or be mandated to work with legacy code in one language while extending functionality with another. While there are many such drivers promoting multilingual codebases, they come with significant software engineering challenges. Although a software development environment might support multiple languages (e.g., Eclipse IDEs), it may leave the language boundaries (language interoperability) opaque. While it may be possible to automatically inspect individual language components of the codebase for software engineering metrics, it may be difficult or impossible to do this on a single accurate description of the complete multilingual codebase.

Heterogeneous or multilingual codebases arise in many cases because software has been developed over a long period by both in-house and external software developers. Libraries for numerical computation may have been constructed in FORTRAN, C and C++ for example, and front-end libraries may have been built in JavaScript.

A multilingual codebase gives rise to many software engineering issues, including:

- Redundancy, e.g., procedures in several different language libraries for the same functionality, necessitating refactoring

- Debugging complexity as languages interact with each other in unexpected ways

- Security issues relating to what information is exposed when one language procedure is called from another

Data Files

There are three kinds of data files in MLSA:

- data files that contain a monolingual Abstract Syntax Tree (AST) in text format

- data files that contain a monolingual AST in JSON format

- data files in comma separated values format (CSV) that contain the results of various kinds of static analysis

The files follow these naming conventions:

- AST files: NAME.X_ast.txt or NAME.X_ast.json

- Monolingual procedure call graph files: NAME.X_call.csv

- Monolingual procedure call graph files with API integration: NAME.X_finalcall.csv

- Combined multilingual call graph file: NAME_callgraph.csv

- Combined function file: NAME_funcs.csv

- Forward flow control file: NAME.X_fcfg.csv

- Reverse flow control file: NAME.X_rcfg.csv

- Monolingual variable assignments: NAME.X_vars.csv

- Monolingual reaching definitions analysis: NAME.X_rda.csv

The csv files contain the following fields:

- NAME.X_call.csv

- call id, class, scope, function name, argument1, argument2...

- NAME.X_finalcall.csv

- call id, class name, scope, function called, argument1, argument2...

- NAME_callgraph.csv

- call program name, call program type, function program name, call id, class name, scope, function called, argument1, argument2...

- NAME_funcs.csv

- program name, class name, function name, number of parameters
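As an illustration of the field layout listed above, the following is a minimal sketch of how a NAME_callgraph.csv file could be read. The `CallEdge` record, the `read_callgraph` helper and the sample rows are hypothetical and are not part of MLSA; the sketch only assumes that the fields appear in the order given above and that everything after the seventh field is an argument.

```python
import csv
import io
from typing import NamedTuple


class CallEdge(NamedTuple):
    """One row of a NAME_callgraph.csv-style file (hypothetical record type)."""
    call_program: str
    call_program_type: str
    function_program: str
    call_id: str
    class_name: str
    scope: str
    function_called: str
    arguments: tuple


def read_callgraph(handle):
    """Parse csv rows into CallEdge records, skipping rows with too few fields."""
    edges = []
    for row in csv.reader(handle):
        if len(row) < 7:  # blank or malformed row
            continue
        fixed = [field.strip() for field in row[:7]]
        args = tuple(field.strip() for field in row[7:])
        edges.append(CallEdge(*fixed, args))
    return edges


# Made-up sample rows, for illustration only.
SAMPLE = (
    "main.c,c,main.c,1,None,main,Py_Initialize\n"
    "main.c,c,helper.py,2,None,main,helper.py,fp,helper.py\n"
)

edges = read_callgraph(io.StringIO(SAMPLE))
for edge in edges:
    print(edge.call_program, "->", edge.function_program, edge.function_called)
```

Keeping the variable-length argument list as a separate tuple means the fixed fields can be accessed by name regardless of how many arguments a call has.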

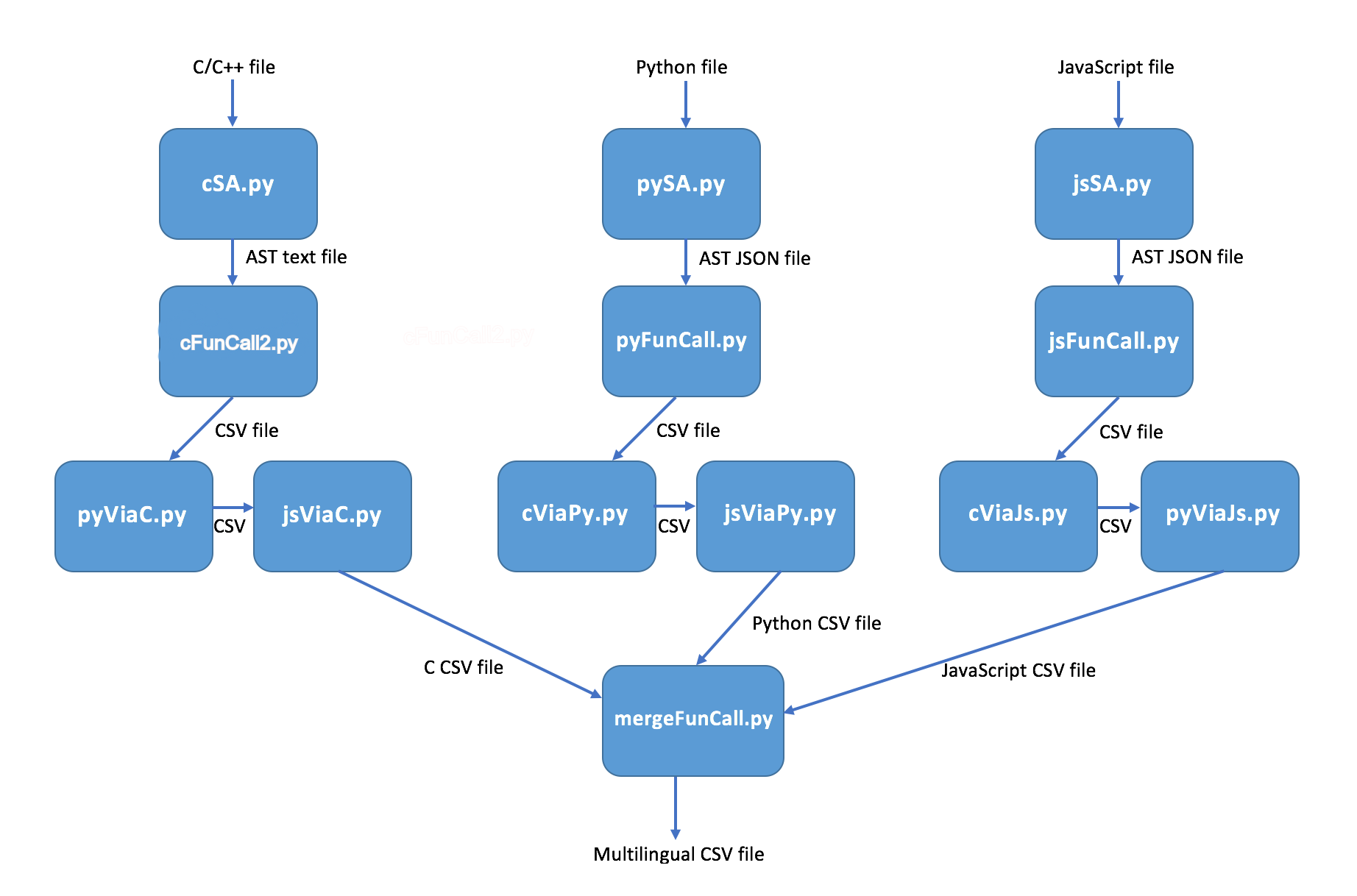

Filters and Pipelines

AST file generation

- Clang-check is used to generate AST files for C and C++ programs

- The Python AST library is used to generate AST for Python programs

- SpiderMonkey is used to generate the AST for JavaScript programs

Monolingual procedure call filters

- cFunCall.py reads a NAME.c_ast.txt (or cpp) file and generates a NAME.c_call.csv file containing the function call information in the file, while also adding to a shared file NAME_funcs.csv that collects information about all the functions defined in the program.

- pyFunCall.py reads a NAME.py_ast.json file and generates a NAME.py_call.csv file containing the function call information in the file, while also adding to a shared file NAME_funcs.csv that collects information about all the functions defined in the program.

- jsFunCall.py reads a NAME.js_ast.json file and generates a NAME.js_call.csv file containing the function call information in the file, while also adding to a shared file NAME_funcs.csv that collects information about all the functions defined in the program.
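To give a feel for what a monolingual call filter does, here is a minimal sketch that walks a Python AST and records each call as a row of call id, class, scope, function name and arguments. It is not the pyFunCall.py implementation: it works directly on Python's `ast` module rather than on a NAME.py_ast.json file, and the `CallCollector` class and the `"None"` and `"GLOBAL"` placeholders are assumptions made for the example.

```python
import ast


class CallCollector(ast.NodeVisitor):
    """Collect rows of [call id, class, scope, function name, arg1, arg2, ...]."""

    def __init__(self):
        self.rows = []
        self.class_stack = []
        self.scope_stack = []

    def visit_ClassDef(self, node):
        self.class_stack.append(node.name)
        self.generic_visit(node)
        self.class_stack.pop()

    def visit_FunctionDef(self, node):
        self.scope_stack.append(node.name)
        self.generic_visit(node)
        self.scope_stack.pop()

    def visit_Call(self, node):
        # ast.unparse needs Python 3.9 or later.
        name = ast.unparse(node.func)
        args = [ast.unparse(arg) for arg in node.args]
        class_name = self.class_stack[-1] if self.class_stack else "None"
        scope = self.scope_stack[-1] if self.scope_stack else "GLOBAL"
        self.rows.append([len(self.rows) + 1, class_name, scope, name, *args])
        self.generic_visit(node)  # also record calls nested in the arguments


SOURCE = """
import helper

def run():
    helper.load("data.txt", 3)

print(run())
"""

collector = CallCollector()
collector.visit(ast.parse(SOURCE))
rows = collector.rows
for row in rows:
    print(row)
```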

Interoperability filters

- pyViaC.py reads a C function call file NAME.c_call.csv and scans for Python interoperability. Currently it only implements the Python.h PyRun_SimpleFile API. It outputs a revised csv function call file NAME.c_finalcall.csv.

- jsViaPy.py reads a Python function call file NAME.py_call.csv and scans for JavaScript interoperability. Currently it only implements the PyV8 eval API. It outputs a revised csv function call file NAME.py_finalcall.csv.

- pyViaJs.py reads a JavaScript function call file NAME.js_call.csv and scans for Python interoperability. Currently it only implements the jQuery ajax API. It outputs a revised csv function call file NAME.js_finalcall.csv.

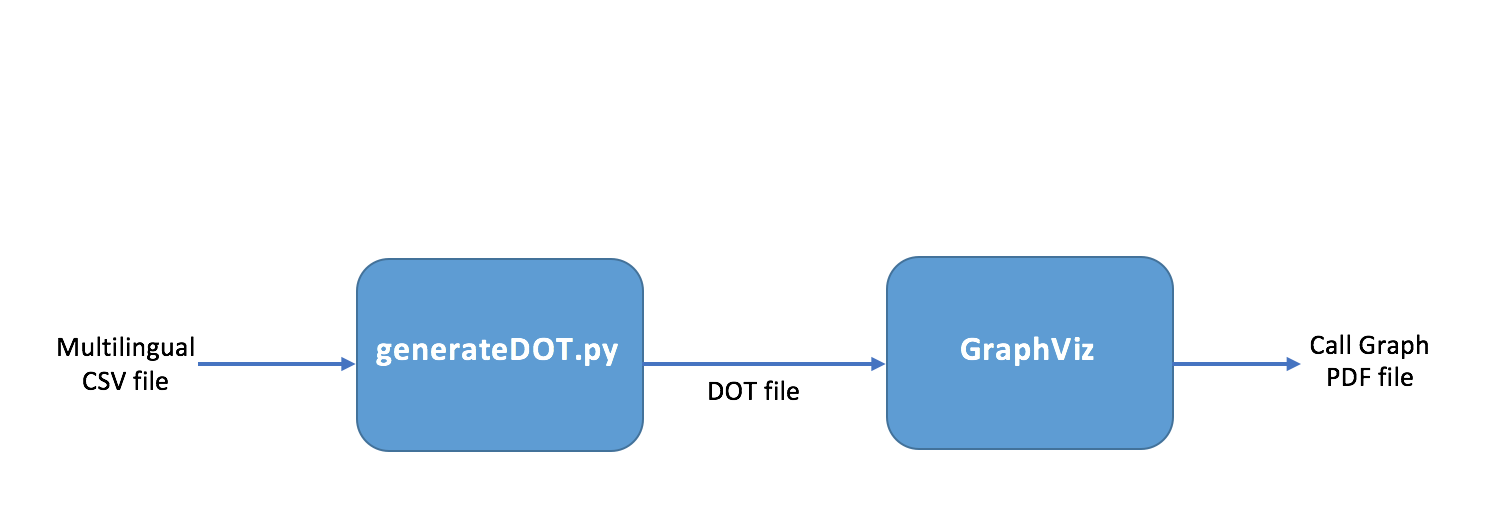

Multilingual combination and graphing filters

- mergeFunCall.py merges the function calls in the XX_finalcall.csv files into a single function call file. It also detects Python programs calling C/C++ procedures through pybind11. When called from mlcg.py, this output is given the name of the first argument to mlcg.py, e.g. if the argument was test0, then the file is called test0_callgraph.csv.

- Pybind11:

- MLSA processes all the call.csv files of the programs (C++, Python) and generates a merged csv file that shows the interoperability of the programs by listing all function calls, showing where each function is defined and where it is called.

- For each function call in a Python csv file that has the form 'A.B', MLSA splits the name, looks for a module/file named A, and searches for the definition of 'B' in that module's csv file (using funcs.csv). It then records 'A' as the function call program name. If the module appears to be a C++ file, MLSA checks whether 'B' is present as the first argument of an “OBJ.def” call. If so, it replaces 'B' with the function name given in the second argument of that “OBJ.def” call in the csv file.

- generateDOT.py produces a PDF file from a call graph csv file displaying the call graph.
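The pybind11 name resolution described above for mergeFunCall.py can be sketched roughly as follows. This is not the MLSA code: the `resolve_pybind_call` function, the two lookup dictionaries and the file extensions checked are all assumptions made for illustration. The only pybind11 detail relied on is that `OBJ.def("name", &function)` exposes a C++ function under a Python-visible name.

```python
def resolve_pybind_call(call_name, modules, def_calls):
    """Resolve a Python call of the form 'A.B'.

    modules:   module name -> source file name, e.g. {"example": "example.cpp"}
    def_calls: source file -> list of (first_arg, second_arg) taken from
               OBJ.def(...) calls found in that file (hypothetical structure)
    Returns (program name or None, function name).
    """
    module, _, func = call_name.rpartition(".")
    if not module:
        return None, call_name          # plain call, nothing to resolve
    program = modules.get(module)
    if program is None:
        return None, call_name          # unknown module, leave unchanged
    if program.endswith((".cpp", ".cc", ".cxx")):
        for exposed, target in def_calls.get(program, []):
            if exposed.strip('"') == func:
                # Replace the Python-visible name with the C++ function name.
                return program, target.lstrip("&")
    return program, func


modules = {"example": "example.cpp", "util": "util.py"}
def_calls = {"example.cpp": [('"add"', "&add_ints")]}

print(resolve_pybind_call("example.add", modules, def_calls))
print(resolve_pybind_call("util.load", modules, def_calls))
print(resolve_pybind_call("print", modules, def_calls))
```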

Flow Control Filters

- cFlowControl.py reads an AST file NAME.c_ast.txt (or cpp) and generates a csv file containing the forward flow control information NAME.c_fcfg.csv, and reverse control flow information NAME.c_rcfg.csv.

- pyFlowControl.py - does not currently exist

- jsFlowControl.py - does not currently exist

Assignment collectors

- cAssignmentCollector.py reads the C AST file and locates all variable assignments and their line numbers. This provides an input that can be used in various kinds of assignment analysis. It is currently only used in the RDA analysis. It can currently also implement two simple static evaluation functions:

- It can report the assignment of a literal to a variable

- It can detect the use of strcpy in a C program to set a character array to a literal

- Anything else it marks as an expression or a function call

- pyAssignmentCollector.py - does not currently exist

- jsAssignmentCollector.py - does not currently exist

Reaching Definitions

- RDA (Kill-Gen/Exit-Entry) implements a reaching definitions analysis for each variable in the program. It reads the NAME.c_vars.csv file for the program to identify all variable assignments, and it reads the NAME.c_rcfg.csv file to get the reverse control flow for the program. It generates the file NAME.c_rda.csv with the solutions for the line entry sets. It does not record the exit set solutions, although it does compute them.

- cRDAGroup.py sets up the RDA pipeline of cAssignmentCollector, cFlowControl, and the RDA.

- pyRDAGroup.py - does not currently exist

- jsRDAGroup.py - does not currently exist
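For readers unfamiliar with the Kill-Gen/Exit-Entry formulation mentioned above, here is a minimal textbook-style sketch of a reaching definitions analysis. It is not the MLSA RDA module: the in-memory dictionaries stand in for the contents of the NAME.c_vars.csv and NAME.c_rcfg.csv files, whose exact layout is not described here, and it assumes at most one assignment per line.

```python
def reaching_definitions(assignments, predecessors):
    """Iterative reaching definitions analysis over program lines.

    assignments:  line -> variable assigned on that line
    predecessors: line -> list of lines that can execute immediately before it
                  (the reverse control flow)
    Returns line -> set of (variable, defining line) pairs at line entry.
    """
    lines = sorted(predecessors)
    entry = {ln: set() for ln in lines}
    exit_ = {ln: set() for ln in lines}
    changed = True
    while changed:                       # repeat until a fixed point is reached
        changed = False
        for ln in lines:
            # Entry set: union of the exit sets of all predecessors.
            new_entry = set()
            for pred in predecessors[ln]:
                new_entry |= exit_.get(pred, set())
            var = assignments.get(ln)
            if var is None:
                new_exit = set(new_entry)
            else:
                kill = {d for d in new_entry if d[0] == var}
                gen = {(var, ln)}
                new_exit = (new_entry - kill) | gen
            if new_entry != entry[ln] or new_exit != exit_[ln]:
                entry[ln], exit_[ln] = new_entry, new_exit
                changed = True
    return entry


# Lines of a made-up program:
#   1: x = 1
#   2: if (c)
#   3:     x = 2
#   4: y = x
assignments = {1: "x", 3: "x", 4: "y"}
predecessors = {1: [], 2: [1], 3: [2], 4: [2, 3]}

entry_sets = reaching_definitions(assignments, predecessors)
for line, defs in entry_sets.items():
    print(line, sorted(defs))
```

In the example, both definitions of `x` reach line 4 because the assignment on line 3 is only executed on one branch.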

Pipelines

- cSA.py sets up the call graph and RDA pipeline for C/C++ sources

- pySA.py sets up the call graph pipeline for Python sources

- jsSA.py sets up the call graph pipeline for JavaScript sources

- mlcg.py processes its argument list of files and folders, calling cSA, pySA, jsSA as necessary to produce a combined multilingual call graph.

Architecture

Lightweight programs (which we call filters) operate on program source files and/or data files and produce data files. The filters can be stacked in pipelines, where each filter in the pipeline reads data files generated by prior filters and in turn generates new data files. The design motivation behind this structure is to allow pipelines of filter programs to be constructed to implement program analysis. This modular design isolates the language-specific first pipeline stages from later language-independent modules, and in this way supports sophisticated analysis for multilingual codebases.
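The filter-and-pipeline idea can be sketched in a few lines. The two toy filters below are hypothetical and far simpler than the MLSA filters: the first finds call-like names with a regular expression rather than an AST, and the second just copies rows with an extra column. The point is only that each stage reads a file, writes a new file with a derived name, and hands that file to the next stage.

```python
import csv
import re
import tempfile
from pathlib import Path


def toy_call_filter(source: Path) -> Path:
    """Language-specific stage: write one (id, name) row per 'name(' found."""
    out = source.with_name(source.name + "_call.csv")
    names = re.findall(r"([A-Za-z_][\w.]*)\s*\(", source.read_text())
    with out.open("w", newline="") as fh:
        csv.writer(fh).writerows([i, n] for i, n in enumerate(names, 1))
    return out


def toy_final_filter(call_csv: Path) -> Path:
    """Language-independent stage: copy each row and append a status column."""
    out = call_csv.with_name(call_csv.name.replace("_call.csv", "_finalcall.csv"))
    with call_csv.open(newline="") as src, out.open("w", newline="") as dst:
        writer = csv.writer(dst)
        for row in csv.reader(src):
            writer.writerow(row + ["checked"])
    return out


def run_pipeline(source: Path, filters):
    """Feed the data file produced by each filter into the next one."""
    data = source
    for stage in filters:
        data = stage(data)
    return data


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "demo.py"
    src.write_text("import helper\nhelper.load('x')\nprint(1)\n")
    final = run_pipeline(src, [toy_call_filter, toy_final_filter])
    final_name = final.name
    with final.open(newline="") as fh:
        final_rows = list(csv.reader(fh))

print(final_name, final_rows)
```

Because every stage communicates only through files, a later stage does not need to know which language the first stage parsed.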

The process starts with C, Python and JavaScript source code from which separate ASTs (Abstract Syntax Tree) are dumped using Clang-Check (for C files)

The process starts with C, Python and JavaScript source code from which separate ASTs (Abstract Syntax Tree) are dumped using Clang-Check (for C files)

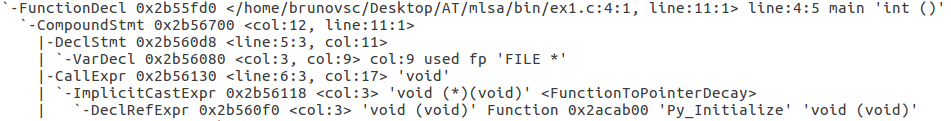

Figure 1: Portion of a C AST file

the AST module and the file ast2json.py

Figure 1: Portion of a C AST file

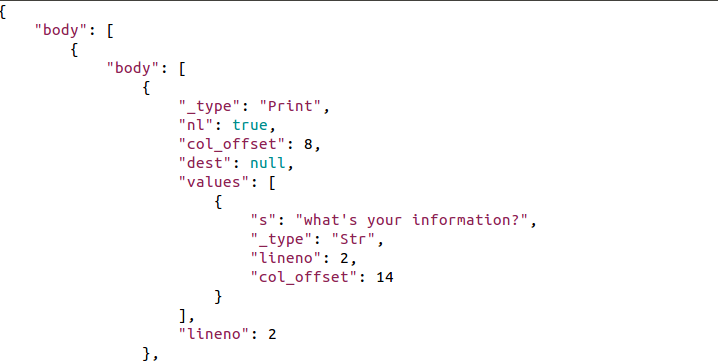

the AST module and the file ast2json.py Figure 2: Portion of a Python AST json file

And SpiderMonkey

Figure 2: Portion of a Python AST json file

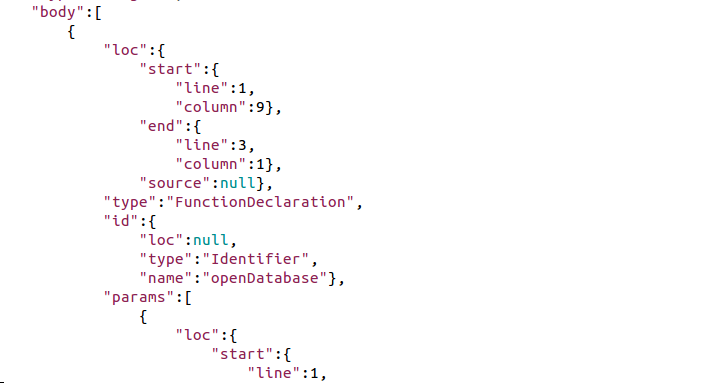

And SpiderMonkey Figure 3: Portion of a JavaScript AST json file

The AST files have very different structures for C, Python and Javascript, but the parsers are designed to handle each kind of AST differently. Those parsers filter the AST files, detecting and recording function calls and their arguments. Initially, the program is capable of detecting literals and variables as arguments. Reaching Definition Analysis has been implemented for C/C++ programs that call Python programs (but none of the other languages) to handle statically assigned variables as arguments to functions. The current version of the program handles part of the Python.h interface between C and Python. It only analyzes “PyRun_SimpleFile” calls. Other mechanisms for calling Python from C will also be implemented in the future. The version can also handle PyV8 's eval function to call a JavaScript program from Python, and JQuerry's ajaz function to call a Python program from JavaScript. In the future, the program will be able to handle cases in which a JavaScript program is called from a C program, and both JavaScript and Python functions call C programs.

Figure 3: Portion of a JavaScript AST json file

The AST files have very different structures for C, Python and Javascript, but the parsers are designed to handle each kind of AST differently. Those parsers filter the AST files, detecting and recording function calls and their arguments. Initially, the program is capable of detecting literals and variables as arguments. Reaching Definition Analysis has been implemented for C/C++ programs that call Python programs (but none of the other languages) to handle statically assigned variables as arguments to functions. The current version of the program handles part of the Python.h interface between C and Python. It only analyzes “PyRun_SimpleFile” calls. Other mechanisms for calling Python from C will also be implemented in the future. The version can also handle PyV8 's eval function to call a JavaScript program from Python, and JQuerry's ajaz function to call a Python program from JavaScript. In the future, the program will be able to handle cases in which a JavaScript program is called from a C program, and both JavaScript and Python functions call C programs.

When a designated function that calls a program in another language, such as “PyRun_SimpleFile”, JSContext().eval() or $.ajax(), is found, its argument (the name of the Python or JavaScript file) is treated as a function call, and the executable portion of that file is represented as the main function in the original program. That creates the connection between the two files, which allows the subsequent programs to build the call graph.
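A minimal sketch of this rewriting step, restricted to the PyRun_SimpleFile case, might look like the following. It is not the pyViaC.py implementation: the row layout follows the NAME.X_call.csv fields listed earlier, while the `rewrite_interop` function and the exact form of the rewritten row are assumptions. The sketch relies on the fact that in Python.h the file name is the second argument of `PyRun_SimpleFile(fp, filename)`, and it leaves non-literal arguments unchanged instead of resolving them with reaching definitions analysis.

```python
# Function name -> index of the argument that names the called file.
INTEROP_CALLS = {"PyRun_SimpleFile": 1}


def is_string_literal(text):
    """True if text looks like a quoted string literal."""
    return len(text) >= 2 and text[0] == text[-1] and text[0] in "\"'"


def rewrite_interop(rows):
    """Rewrite rows of [call id, class, scope, function name, arg1, ...].

    When an interoperability call with a literal file name is found, the
    file name replaces the function name, so that the called file appears
    in the call graph as if it were a function.
    """
    out = []
    for row in rows:
        name, args = row[3], row[4:]
        index = INTEROP_CALLS.get(name)
        if index is not None and index < len(args) and is_string_literal(args[index]):
            target = args[index][1:-1]      # strip the surrounding quotes
            out.append(list(row[:3]) + [target])
        else:
            out.append(list(row))           # ordinary call or unresolved variable
    return out


rows = [
    [1, "None", "main", "Py_Initialize"],
    [2, "None", "main", "PyRun_SimpleFile", "fp", '"helper.py"'],
    [3, "None", "main", "PyRun_SimpleFile", "fp", "fname"],
]

final_rows = rewrite_interop(rows)
for row in final_rows:
    print(row)
```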